Older Versions of Dual Render Fusion

Disclaimer

From version 0.13.0 through version 0.21.0 of the Snapdragon Spaces SDK for Unity, the Dual Render Fusion public beta was available as a separate Experimental package.

From version 0.23.0 onwards, Dual Render Fusion is an integrated part of the Snapdragon Spaces SDK. New projects should use this integrated version of the Dual Render Fusion (Experimental) feature.

The information in this document is out of date for version 0.23.0 and later versions of the feature.

See the Dual Render Fusion Setup Guide for up-to-date information about how to set up a new project using Dual Render Fusion.

For information about migrating a Dual Render Fusion project from the old add-on package (versions 0.13.0 to 0.21.0) to the newly integrated feature, see the Dual Render Fusion Migration Guide.

Prerequisites

The recommended Unity Editor version for working with Snapdragon Spaces is 2021.3 LTS (starting with 2021.3.16f1). Later versions of the Unity Editor have not been extensively tested and may not work as reliably.

The Android Build Support module must be added when installing the Unity Editor to be able to export .apk files. The module can also be added afterward through the Unity Hub.

Start with a 3D Unity Project

Create a new 3D (or 3D URP) Unity Project, or use an existing Unity project.

If converting a project already built using Snapdragon Spaces, back up the project and head straight to Import the Dual Render Fusion package.

Import the Snapdragon Spaces package

The Snapdragon Spaces for Unity SDK comes as a package in the form of a tarball file.

Please follow the Unity instructions to import the package into the project using the Unity Package Manager by adding the tarball (.tgz) file located in the Unity Package folder.

To have the package listed with a relative path (better for version control) instead of an absolute path, copy the .tgz file into the project's Packages directory and then add it from there.

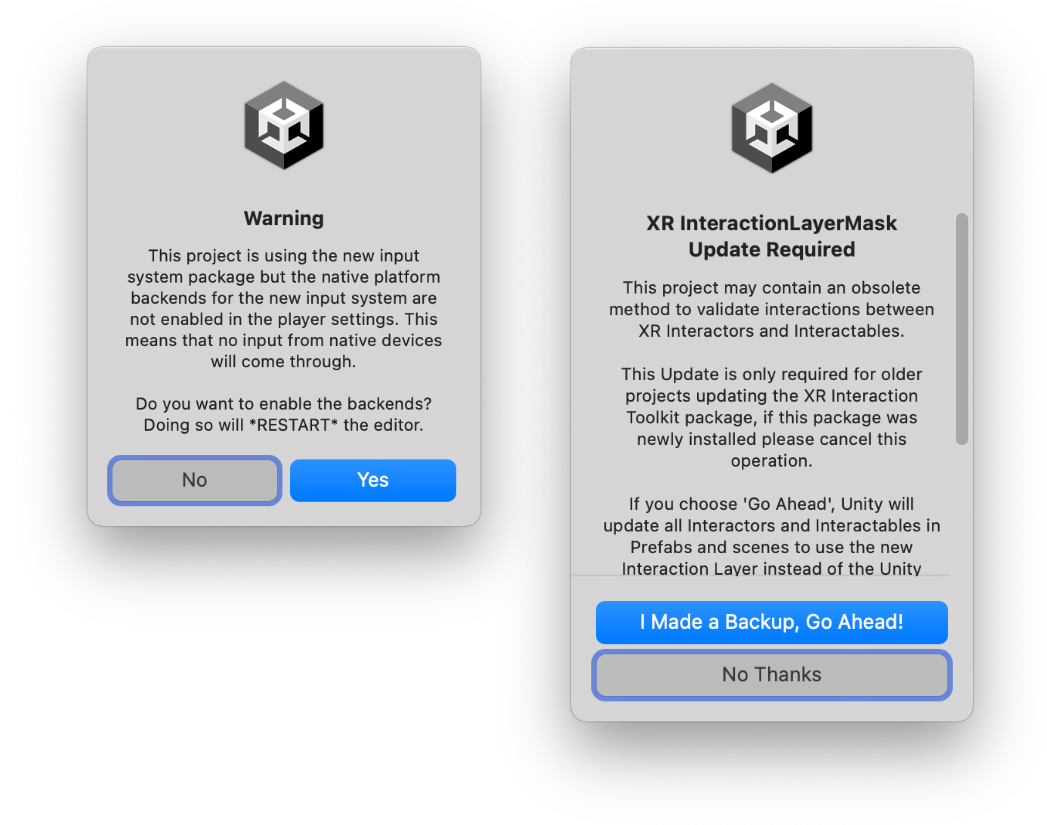

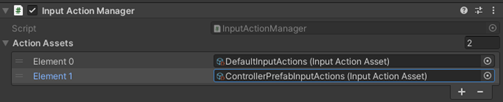

After importing the package, Unity might show a prompt to enable the new input system. Click Yes to ensure full functionality with the OpenXR and XR Interaction Toolkit packages. If the old input system is also needed, set the Active Input Handling value under Player > Other Settings > Configuration to Both.

Import the Dual Render Fusion package

From Snapdragon Spaces SDK for Unity version 0.13.0 to version 0.21.0, Dual Render Fusion public beta is available as an Experimental package. The files to enable this feature are provided as a separate Unity Package tarball available at the Snapdragon Spaces for Unity SDK download page.

Follow the Unity instructions to import the package into the project using the Unity Package Manager, adding the tarball (.tgz) file located in the Dual Render Fusion Package download.

It is also recommended to import the Fusion Samples at this time through the Package Manager.

Change the Project Settings

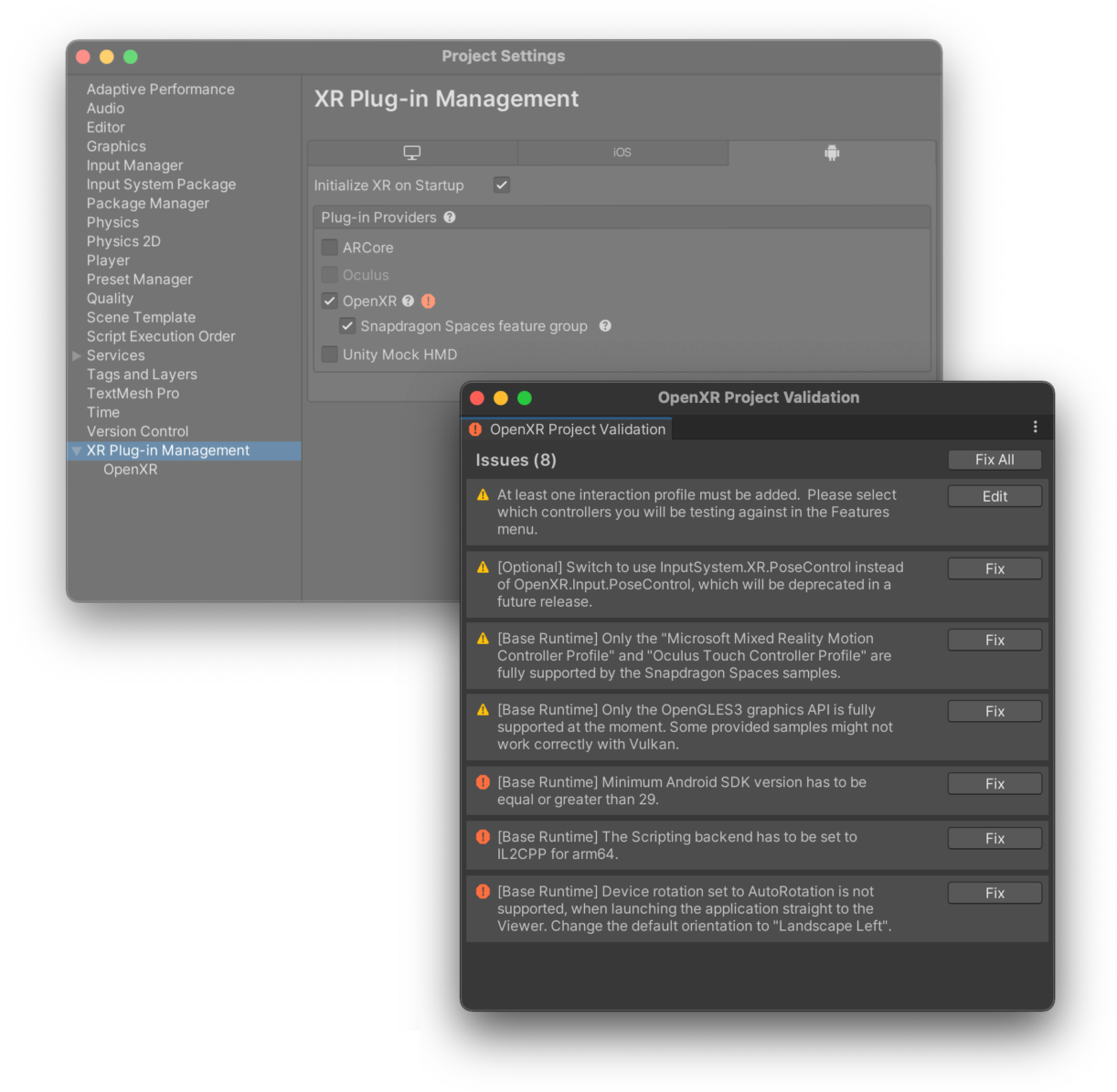

To enable the Snapdragon Spaces OpenXR plugin, navigate to the project settings under Edit > Project Settings > XR Plug-in Management and open the Android tab.

Check the OpenXR plug-in but do not check the Snapdragon Spaces feature group yet.

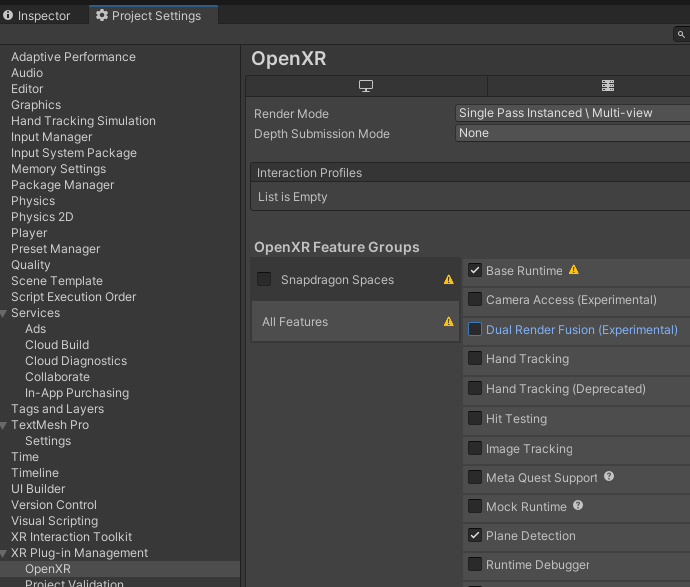

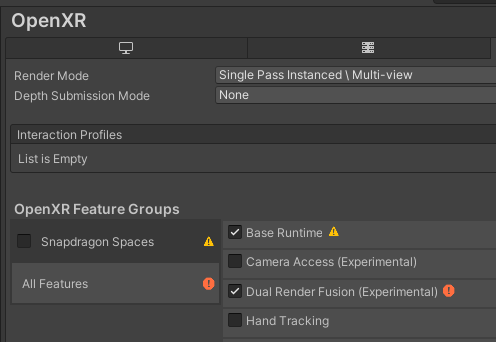

Instead, click on the OpenXR menu item to show the different OpenXR feature options. Note the new Dual Render Fusion Feature, and check the Base Runtime Feature and the Dual Render Fusion Feature as shown. Click on the red exclamation point next to Dual Render Fusion to open the Project Validator.

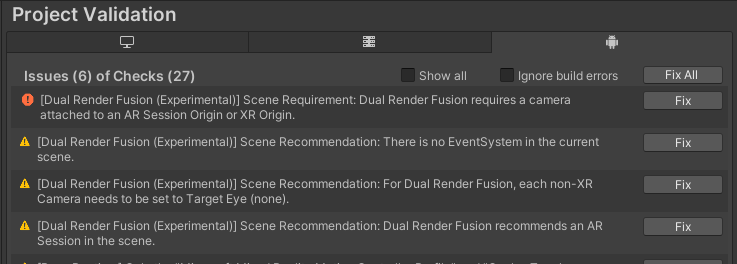

The Project Validator will show several Fix options for updating both the Unity Project and the currently open Scene, so ensure that the correct Scene is open in the Project.

Note the Validator options with the prefix [Dual Render Fusion (Experimental)]. Resolve these first to update the Project and open Scene, and only then select Fix All for the remainder of the Project. The Dual Render Fusion fixes also resolve some of the other default Spaces Validator issues.

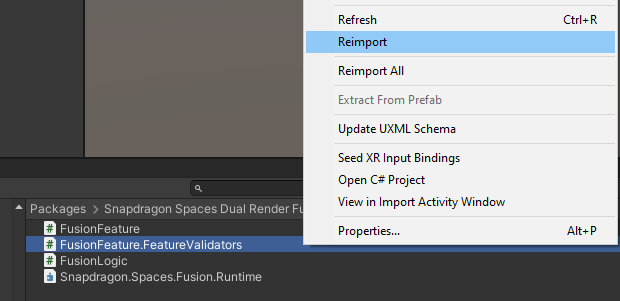

If the Dual Render Fusion feature shows an exclamation mark but no related Project Validator options appear, reimport the Fusion Feature Validator script in Packages > Snapdragon Spaces Dual Render Fusion (Experimental) > Runtime > FusionFeature.FeatureValidation to fully re-process the project validation files.

When done with the Dual Render Fusion validations, click on the Fix buttons next to the remaining entries to apply any needed project settings. Some fixes may require a restart of the Editor, which is normal.

At this point, Dual Render Fusion should be properly enabled for launching. See below for additional information and advanced configuration features.

Configure Dual Render Fusion Settings

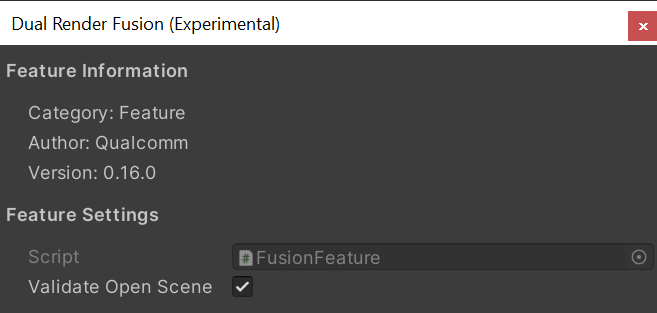

Available settings for the Dual Render Fusion feature include:

- Validate Open Scene - when this setting is enabled, the Unity Scene Validator will check if there is an XR camera and a smartphone camera properly configured in the open scene. Disable this setting if the project's build system doesn’t have a full-feature scene (like an additive scene) to avoid build blockers.

Dual Render Fusion Samples

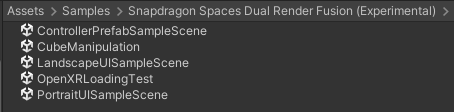

A series of sample scenes and sample code scripts are available through the Package Manager.

Controller Prefab Sample Scene - a sample scene used for adapting the Android Companion Controller for Snapdragon Spaces into a Unity Prefab, with full control over the Settings and Exit button. See Companion Controller below for more details.

CubeManipulation - a simple scene with a cube that can be moved using one, two, or three fingers on the touchpad as a helper tool for creating a responsive multi-touch interface.

LandscapeUISampleScene - a simple scene with a Landscape Canvas containing interactable Canvas UI elements to manipulate a cube.

OpenXRLoadingTest - a scene demonstrating how an app can start up immediately on the smartphone and then enable OpenXR once a connection with the glasses is established. See Dynamic OpenXR Loader below for more details.

PortraitUISampleScene - a simple scene with a Portrait Canvas containing interactable Canvas UI elements.

Companion Controller

Some developers will want to migrate existing Snapdragon Spaces projects to use Dual Render Fusion and take advantage of the Companion Controller capabilities of gyroscopic 3DOF pointing. Follow these steps to add this to a project.

To replicate the Companion Controller in Unity, several Assets are needed as well as a few scripts.

- If needed, import TextMeshPro whenever prompted.

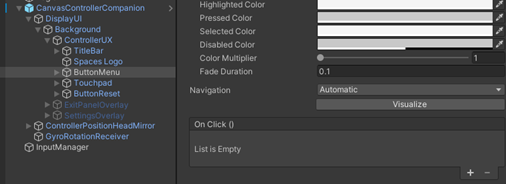

- Drag the CanvasControllerCompanion Prefab from the Samples into the Hierarchy.

- Two GameObjects will need to be hooked up to the XR Camera since that is not part of the prefab. If these are not set, the XR Origin or AR Session Origin will be autodetected to use the attached Camera. Alternatively, they can be set manually here:

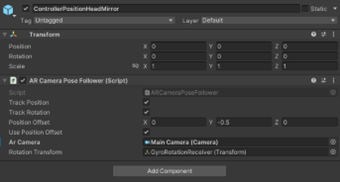

In the ControllerPositionHeadMirror, under the Ar Camera field.

In the GyroRotationReceiver, under the Ar Camera field.

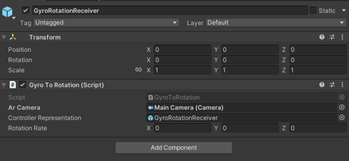

- Ensure that the Input System is set to Both to enable the gyroscope controls:

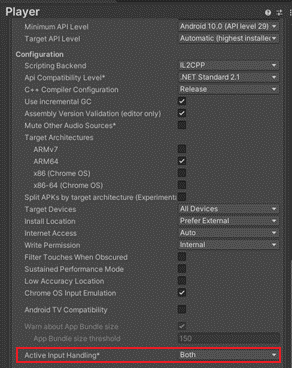

- Add ControllerPrefabInputActions as an additional Action Asset to the Input Action Manager. If there is no Input Action Manager in the Hierarchy, create a GameObject and add the Component.

- To use the Menu button, no special setup is required: add an On Click() event to the Button object in the Hierarchy that represents the Menu Button, as with any standard Unity UI button.

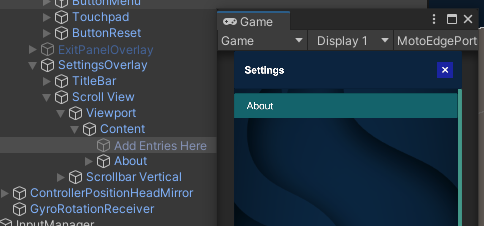

- To add more options in the Settings Menu, temporarily turn on the Settings Overlay GameObject and add menu items to the Scroll Box list. (Currently, the “About” button does not have functionality and is merely there as an example.)
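The Menu button step above can also be wired from a script instead of the Inspector. The following is a minimal sketch, not part of the Fusion Samples: the class name `MenuButtonHandler` and the `menuPanel` field are hypothetical, and toggling a panel is only an assumed example of what the button might do. It uses the standard `UnityEngine.UI.Button.onClick` event.

```csharp
using UnityEngine;
using UnityEngine.UI;

// Hypothetical helper: not shipped with the Dual Render Fusion package.
public class MenuButtonHandler : MonoBehaviour
{
    // Assign the Menu Button from the controller prefab in the Inspector.
    [SerializeField] private Button menuButton;

    // Assumed example target: any app-specific panel to show or hide.
    [SerializeField] private GameObject menuPanel;

    private void OnEnable()
    {
        // Script equivalent of adding an On Click() entry in the Inspector.
        menuButton.onClick.AddListener(ToggleMenu);
    }

    private void OnDisable()
    {
        // Remove the listener so it is not registered twice on re-enable.
        menuButton.onClick.RemoveListener(ToggleMenu);
    }

    private void ToggleMenu()
    {
        menuPanel.SetActive(!menuPanel.activeSelf);
    }
}
```

Attach the component to any GameObject in the scene and assign both fields in the Inspector.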

Dynamic OpenXR Loader

The Dynamic OpenXR Loader is an experimental sample script that allows the application to load OpenXR after the glasses are connected, similar in concept to how headphones work. The script detects the presence of a secondary display and then starts OpenXR if configured to do so. Several callbacks are available to control the behavior of the user interface when OpenXR begins connecting. Refer to the OpenXRLoadingTest sample for an example of how to use this script.

It is not recommended to use this feature for apps that enable the Hand Tracking feature at this time. Currently, the concurrency of the Dynamic OpenXR Loader is incompatible with the Hand Tracking feature, and using this functionality of starting OpenXR outside the app initialization process may cause the application to abruptly exit when attempting to load. This will be fixed in a future release.

When OpenXR begins connecting, the main thread is blocked for a short period of time. It is recommended to display some UI elements signaling that OpenXR is connecting, and for that element to show a confirmation state upon completion of loading.
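The general pattern described in this section can be sketched with Unity's XR Plug-in Management API. This is not the sample's own script. The class name `DeferredXRStart` and its three events are hypothetical, and the sketch assumes that connected glasses appear as a second entry in `Display.displays` and that Initialize XR on Startup is disabled in XR Plug-in Management.

```csharp
using System.Collections;
using UnityEngine;
using UnityEngine.Events;
using UnityEngine.XR.Management;

// Hypothetical sketch: not the Dynamic OpenXR Loader script from the samples.
public class DeferredXRStart : MonoBehaviour
{
    public UnityEvent onConnecting; // e.g. show a "connecting" indicator
    public UnityEvent onConnected;  // e.g. show a confirmation state
    public UnityEvent onFailed;     // e.g. show an error message

    private IEnumerator Start()
    {
        // Assumption: the glasses show up as a secondary display.
        while (Display.displays.Length < 2)
        {
            yield return new WaitForSeconds(0.5f);
        }

        onConnecting.Invoke();
        // Let the UI draw one frame before loading blocks the main thread.
        yield return null;

        XRGeneralSettings settings = XRGeneralSettings.Instance;
        if (settings == null || settings.Manager == null)
        {
            onFailed.Invoke();
            yield break;
        }

        XRManagerSettings manager = settings.Manager;
        yield return manager.InitializeLoader();

        if (manager.activeLoader == null)
        {
            // No XR loader could be initialized.
            onFailed.Invoke();
            yield break;
        }

        manager.StartSubsystems();
        onConnected.Invoke();
    }

    private void OnDestroy()
    {
        XRGeneralSettings settings = XRGeneralSettings.Instance;
        if (settings != null && settings.Manager != null
            && settings.Manager.isInitializationComplete)
        {
            settings.Manager.StopSubsystems();
            settings.Manager.DeinitializeLoader();
        }
    }
}
```

Invoking `onConnecting` one frame before the loader starts gives the UI a chance to appear before the main thread is blocked.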

Feature Validation Configuration

The Dual Render Fusion Feature Validator is set up to easily configure the Unity Project and selected Scene to enable Dual Render Fusion. Here is a list of the validations.

| Scope | Validation | Description | Reason |
|---|---|---|---|
| Project | Disable Custom Launcher | Disables checkboxes in Base Runtime Feature for Launch on Viewer and Launch Controller on Host | Dual Render Fusion requires Custom Launcher behavior to be disabled (see headworn architecture) |
| Scene | AR Session Origin Req'd | Ensures an AR Session Origin (or XR Origin) and associated XR Camera are in the Scene | AR Foundation requires an XR Camera |
| Scene | AR Session Req'd | Ensures an AR Session and AR Input Manager are in the Scene | AR Foundation requires an AR Session |
| Scene | Mobile Camera Req'd | Ensures a Unity Camera representing the mobile device screen is in the Scene | Dual Render Fusion requires a mobile display for rendering |
| Scene | Main Camera Tag | Ensures that either the XR Camera or one of the other non-texture-rendering cameras is tagged as Main Camera, but not both | Having two cameras tagged as MainCamera can cause rendering errors or disrupt other dependencies |
| Scene | Camera Render Priority | Ensures the mobile camera renders after the XR Camera | If the XR Camera renders after the mobile camera, the mobile display will get overtaken by a copy of the XR Camera |
| Scene | Camera Eye Targets | Ensures the XR Camera Eye Target is set to Both and all other Cameras are set to None | Camera Eye Targets determine which display will be rendered to by each camera |
| Scene | Mobile Camera Eye Target (URP) | Shows a warning to check that the mobile camera eye target is set to None | URP cannot programmatically set the Eye Target in the same way as the Built-in Rendering Pipeline, so a warning is shown to manually check it instead |
| Scene | Camera Display Targets | Recommends adding a Fusion Logic GameObject and component if the XR Camera Target Display is not set to Display 1 | Both display targets must be set to Display 1 for Dual Render Fusion to properly render to both devices. XR Camera is set to Target Display 2 by default, and "Fusion Logic" sets the target to the proper display at runtime |
| Scene | Audio Listeners | Recommends turning off one of the Audio Listeners if more than one is active in the scene | Unity expects exactly one active Audio Listener per scene and logs a warning when it finds more |
| Scene | Event System Req'd | Adds an EventSystem and default input module if one is not present | This is a helper check so that touch UI input is processed |
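Several of the scene checks in the table can be mirrored at runtime as a debugging aid. The following is a minimal sketch for the Built-in Render Pipeline, not the package's validator. The class name `FusionCameraCheck` and its two fields are hypothetical. Display targets are not checked, because Fusion Logic adjusts them at runtime.

```csharp
using UnityEngine;

// Hypothetical debugging aid: not the Dual Render Fusion Feature Validator.
public class FusionCameraCheck : MonoBehaviour
{
    [SerializeField] private Camera xrCamera;     // camera under the XR Origin
    [SerializeField] private Camera mobileCamera; // camera for the phone screen

    private void Start()
    {
        if (xrCamera == null || mobileCamera == null)
        {
            Debug.LogWarning("Assign both the XR camera and the mobile camera.");
            return;
        }

        // Camera Render Priority: a higher depth value renders later.
        if (mobileCamera.depth <= xrCamera.depth)
        {
            Debug.LogWarning("The mobile camera should render after the XR camera.");
        }

        // Camera Eye Targets: XR camera targets Both, mobile camera None.
        if (xrCamera.stereoTargetEye != StereoTargetEyeMask.Both)
        {
            Debug.LogWarning("The XR camera Eye Target should be Both.");
        }
        if (mobileCamera.stereoTargetEye != StereoTargetEyeMask.None)
        {
            Debug.LogWarning("The mobile camera Eye Target should be None.");
        }

        // Main Camera Tag: only one of the two cameras should carry it.
        if (xrCamera.CompareTag("MainCamera") && mobileCamera.CompareTag("MainCamera"))
        {
            Debug.LogWarning("Only one camera should be tagged MainCamera.");
        }

        // Audio Listeners: count the enabled listeners on active GameObjects.
        int activeListeners = 0;
        foreach (AudioListener listener in FindObjectsOfType<AudioListener>())
        {
            if (listener.isActiveAndEnabled)
            {
                activeListeners++;
            }
        }
        if (activeListeners > 1)
        {
            Debug.LogWarning("More than one Audio Listener is active in the scene.");
        }
    }
}
```

The script only logs warnings and changes nothing in the scene, so it can be removed once the setup is confirmed.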

App Backgrounding

One disadvantage of the Dual Render Fusion architecture is that, unlike the headworn architecture, apps cannot run purely in the background while a different app runs in the foreground. This limitation can be worked around with Android's picture-in-picture mode, a standard Android feature.
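As a rough illustration, picture-in-picture can be requested from a Unity script through the Android `Activity.enterPictureInPictureMode()` method. This is a minimal sketch and not part of the Snapdragon Spaces SDK. The class name `PictureInPicture` is hypothetical, and the sketch assumes that the app's activity declares `android:supportsPictureInPicture="true"` in its Android manifest and that the device runs Android 8.0 or later. How Dual Render Fusion rendering behaves while in picture-in-picture is not covered here and should be tested on the target device.

```csharp
using UnityEngine;

// Hypothetical helper: not part of the Snapdragon Spaces SDK.
public static class PictureInPicture
{
    public static void Enter()
    {
#if UNITY_ANDROID && !UNITY_EDITOR
        // Fetch the current Android activity hosting the Unity player.
        using (var unityPlayer = new AndroidJavaClass("com.unity3d.player.UnityPlayer"))
        using (var activity = unityPlayer.GetStatic<AndroidJavaObject>("currentActivity"))
        {
            // Parameterless overload; Android also offers an overload that
            // takes PictureInPictureParams for aspect ratio and actions.
            activity.Call("enterPictureInPictureMode");
        }
#else
        Debug.Log("Picture-in-picture is only available in an Android player build.");
#endif
    }
}
```

Call `PictureInPicture.Enter()` from a UI button or another app-specific trigger. Depending on the Unity and Android versions, the call may need to be dispatched to the Android UI thread.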