Dual Render Fusion (Experimental)

Dual Render Fusion is a new core augmented reality (AR) feature of the Snapdragon Spaces SDK for Unity. It renders simultaneously to both the smartphone screen and a connected AR device from within a single Android activity. This lets an app use the full mobile touchscreen input alongside the full perception capabilities offered by Snapdragon Spaces.

Starting with version 0.23.0 of the Snapdragon Spaces SDK for Unity, the Dual Render Fusion (Experimental) feature is included as part of the SDK. To get started with the latest version of Dual Render Fusion, follow the instructions in the Setup Guide.

Between versions 0.13.0 and 0.21.0 of the Snapdragon Spaces SDK for Unity, Dual Render Fusion was available as an optional add-on package.

For instructions on setting up a Dual Render Fusion project with an older version of the add-on package, see the Version 0.13.0 to 0.21.0 page.

To migrate a project from an older version of Dual Render Fusion to the latest integrated version of the feature, see the Dual Render Fusion Migration Guide.

To view architecture information and supported platforms, refer to the Dual Render Fusion Architecture page.

Dual Render Capabilities

Dual Render Fusion sets up the smartphone as the primary display while treating the tethered AR headset as a connected secondary display.

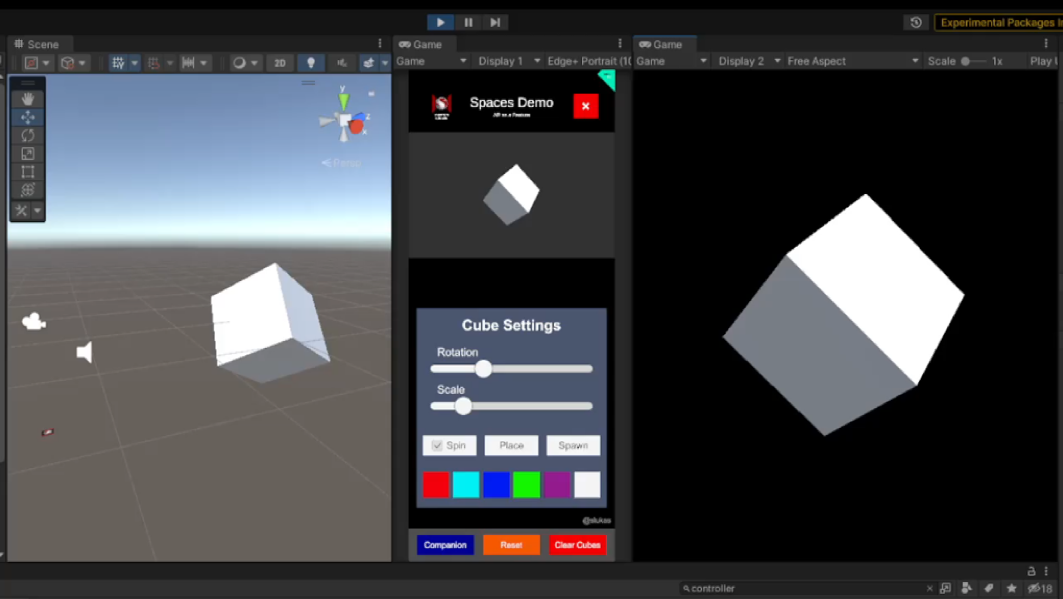

In this screenshot, the AR display targets Display 2 for in-editor testing. When running on the device, the AR display should target Display 1.

With this dual render functionality, new or existing 2D mobile applications can be extended into 3D AR experiences without writing code. The core concept is adding a second in-game camera to the 2D app that acts as an extended AR display.

Full Multi-Modal Input

Dual Render Fusion lets developers use the XR inputs enabled by the Snapdragon Spaces SDK (including hand tracking, raycasts, and the gaze pointer) while still giving users the convenience and familiarity of the full mobile touchscreen. With multi-touch support, the user interface can be as dynamic as any existing mobile app.

Mobile App Extension

Because the phone already handles full-featured input and rendering, adding AR comes down to adding a second in-game camera that handles the poses and render output for the AR glasses. Existing mobile apps can then be experienced in a new, immersive way while keeping familiar controls, which enables rapid porting of existing apps and demos to Snapdragon Spaces.

Use Case Examples

As with mobile apps in general, Dual Render Fusion has a wide range of use cases, since it can enhance virtually any type of existing mobile app. Below are some examples of what everyday AR can look like when it takes advantage of the natural input that Dual Render Fusion provides.

Online Shopping

With touchscreen input, the UI can be optimized for the smartphone, while important elements such as world-scale furniture or clothing can be customized and viewed directly through a connected AR headset.

Media Viewing

Developers can combine natural touchscreen input with hand tracking: users swipe through their favorite photos on the smartphone, then move and enlarge individual photos in AR using hand tracking.

Gaming

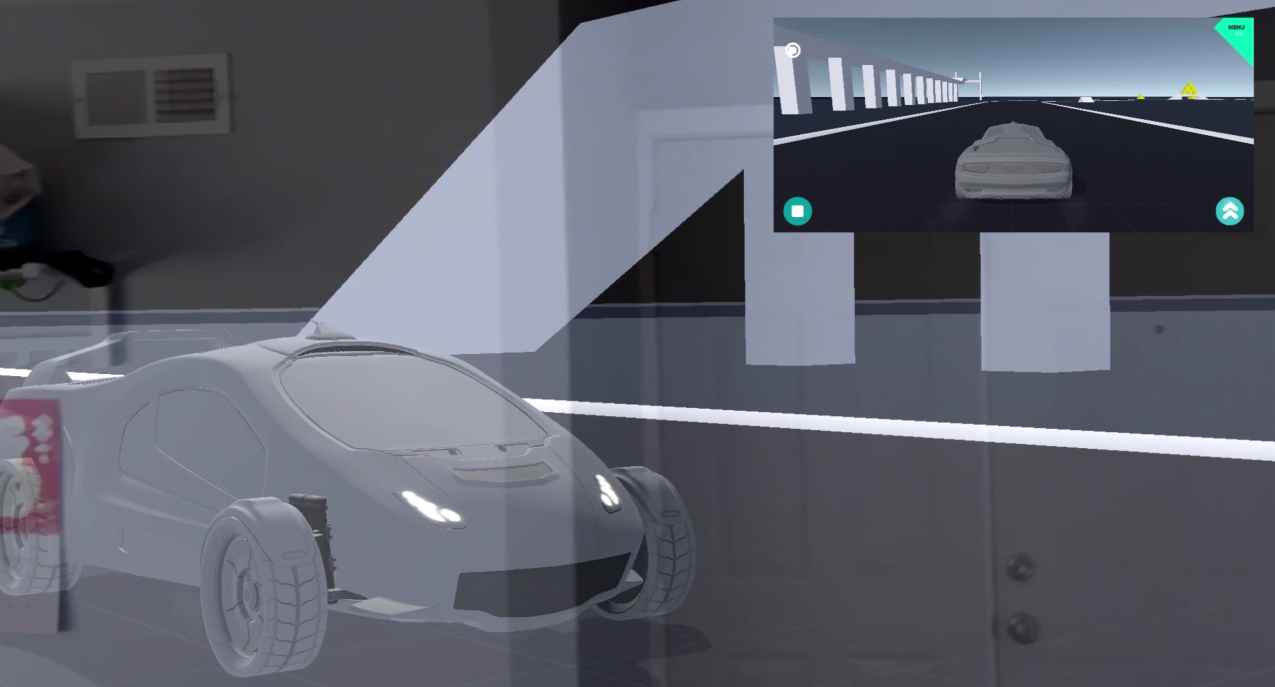

Dual Render Fusion also allows developers to use the smartphone as a full touchscreen controller for mobile games, while displaying an additional immersive view of the game action in augmented reality.