Interaction Components

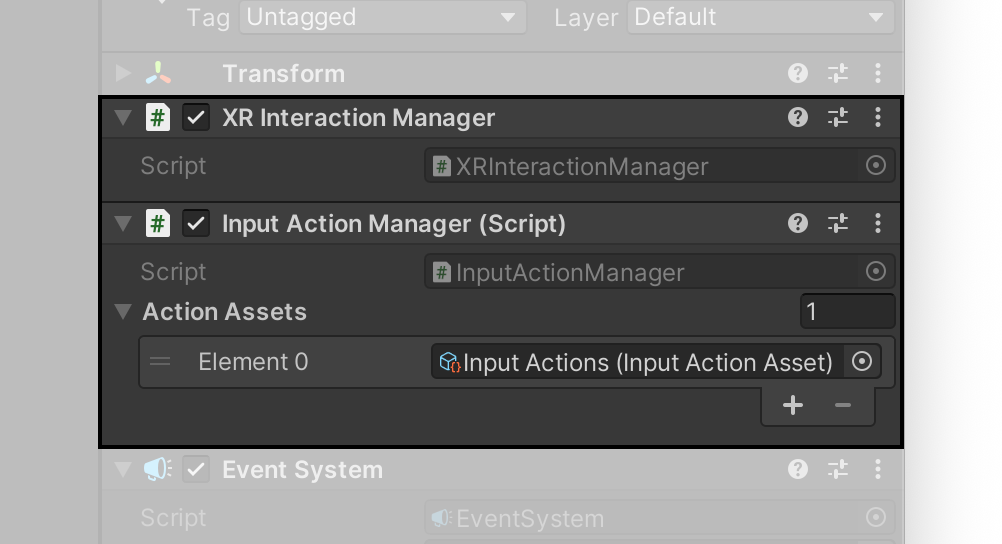

XR Interaction Manager

At least one XR Interaction Manager is required per scene in order to establish a connection between the Interactors and Interactables.

Input Actions must be enabled through the Input Action Manager. To add them manually, select the GameObject that has the Input Action Manager component and add an Input Action asset as a new element in its list of action assets. These assets are located in the samples folder under Shared Assets > Input Actions.

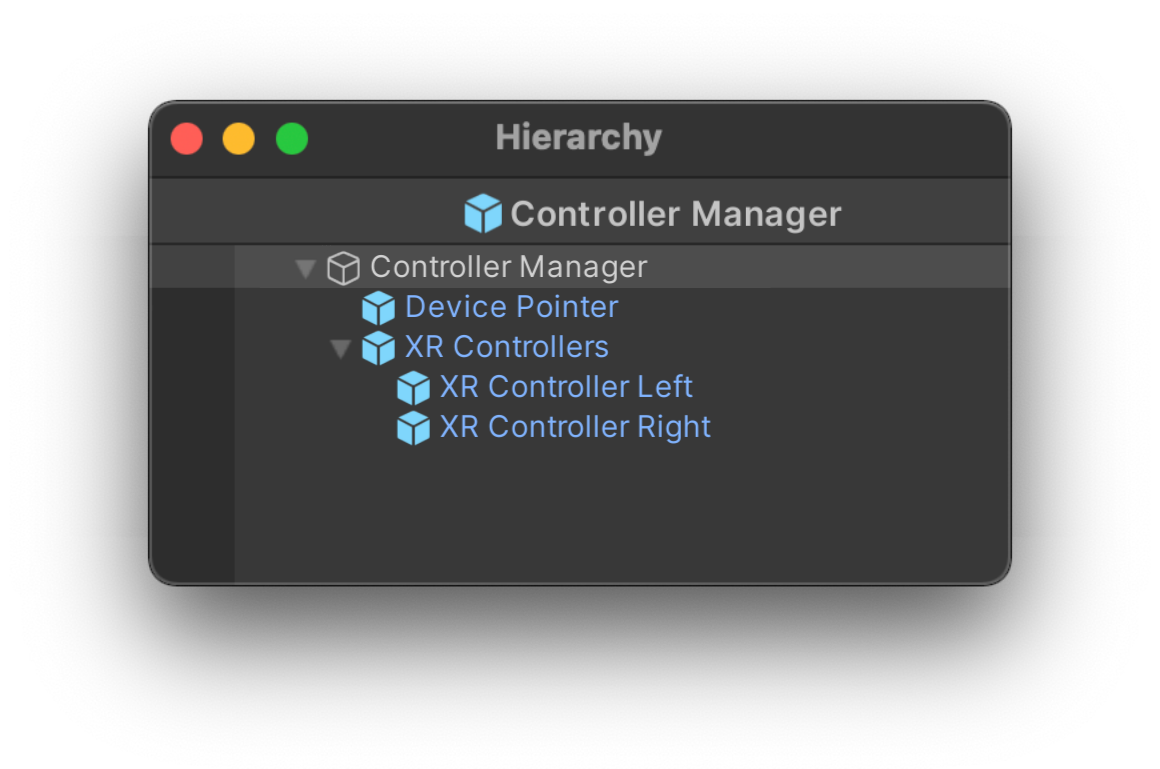

Controller Manager

The Controller Manager is a simple prefab used for interaction in all of the samples except the QCHTI sample. It consists of the following GameObjects:

The XR Controller Manager selects which XRControllerProfile the application uses based on the connected InputDevice. For details, refer to the Unity documentation for the Microsoft Mixed Reality Motion Controller Profile or the Oculus Touch Controller Profile.

If the Host Controller is in use, the Device Pointer is activated. The Device Pointer contains the Host Controller mesh and its input references. If a VR device with two controllers is in use, the XR Controllers GameObject is activated instead. The XR Controllers prefab contains two GameObjects, one for the left controller and one for the right, each with an XR Controller (Action-based) component that references the corresponding controller prefab and its input references.

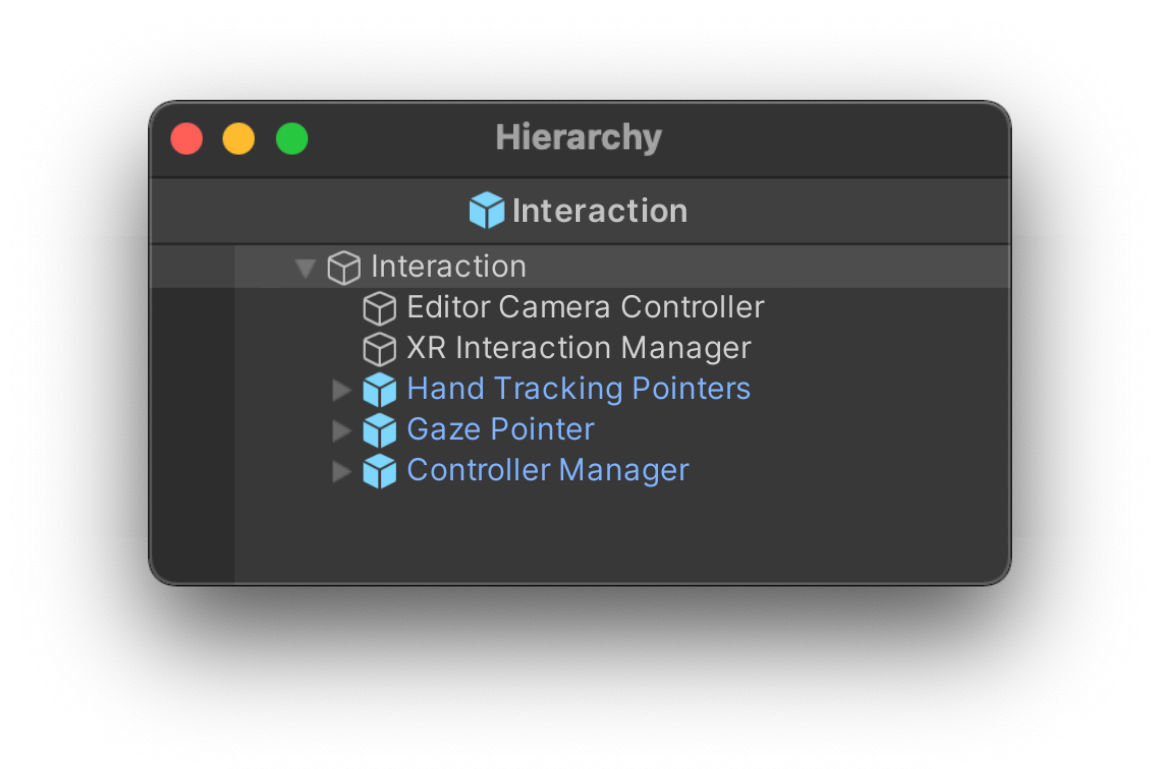

Interaction Prefab

The Interaction prefab is a more complex prefab for switching between the different interaction methods. It is included in the Snapdragon Spaces Samples and contains three prefabs, one for each interaction method currently supported. The XR Interaction Toolkit Sample scene shows how to use this prefab and its components.

The Interaction Manager component is attached to the root GameObject of this prefab. It contains the methods for the different input modalities as well as the code that handles switching between them.

For AR devices, the default configuration starts with Hand Tracking and falls back to the Gaze Pointer if the QCHT package is not included in the Unity project or if Hand Tracking is not supported on the target device. Otherwise, switching moves through the input modalities in the order Hand Tracking > Gaze Pointer > Device Input.
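The AR start-up and switching rules above can be modeled as a small state function. This is a minimal sketch, not the Interaction Manager's actual code: the `Modality` enum, both function names, and the wrap-around from Device Input back to the first modality are all assumptions made for illustration.

```python
from enum import Enum


class Modality(Enum):
    HAND_TRACKING = "Hand Tracking"
    GAZE_POINTER = "Gaze Pointer"
    DEVICE_INPUT = "Device Input"


# Switching order described for AR devices.
# Assumption: after Device Input the cycle wraps back to the first entry.
AR_SWITCH_ORDER = [Modality.HAND_TRACKING, Modality.GAZE_POINTER, Modality.DEVICE_INPUT]


def initial_ar_modality(qcht_installed: bool, hand_tracking_supported: bool) -> Modality:
    """Start with Hand Tracking, or fall back to the Gaze Pointer (hypothetical helper)."""
    if qcht_installed and hand_tracking_supported:
        return Modality.HAND_TRACKING
    return Modality.GAZE_POINTER


def next_ar_modality(current: Modality, hand_tracking_available: bool) -> Modality:
    """Return the modality that follows `current` (hypothetical helper)."""
    # Skip Hand Tracking entirely when it is unavailable on this device/project.
    order = [
        m for m in AR_SWITCH_ORDER
        if hand_tracking_available or m is not Modality.HAND_TRACKING
    ]
    return order[(order.index(current) + 1) % len(order)]
```

For example, `next_ar_modality(Modality.GAZE_POINTER, True)` yields `Modality.DEVICE_INPUT`, while a project without QCHT only alternates between the Gaze Pointer and Device Input.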

For VR/MR devices, if a controller is tracked, the XR Controllers are used as the default input modality; otherwise, Hand Tracking is the default. The Automatic Controller Switch component in the Interaction prefab monitors the controllers' tracking status and automatically disables Hand Tracking when a controller is tracked, or enables it when no controller is tracked. The user can switch between the XR Controllers/Hand Tracking and the Gaze Pointer input modality by pressing the Menu button on the left controller.
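The VR/MR behavior combines an automatic rule (controller tracked or not) with a manual toggle (left Menu button). The sketch below models only that decision logic; the `VrModalitySwitcher` class and its members are hypothetical and do not correspond to the Automatic Controller Switch component's real API.

```python
class VrModalitySwitcher:
    """Hypothetical model of the VR/MR input switching rules."""

    def __init__(self, controller_tracked: bool = False) -> None:
        self.controller_tracked = controller_tracked
        self.gaze_selected = False  # toggled by the left controller's Menu button

    def on_tracking_changed(self, controller_tracked: bool) -> None:
        # Mirrors the automatic switch: a tracked controller turns Hand Tracking off.
        self.controller_tracked = controller_tracked

    def on_left_menu_pressed(self) -> None:
        # Toggle between XR Controllers/Hand Tracking and the Gaze Pointer.
        self.gaze_selected = not self.gaze_selected

    @property
    def hand_tracking_enabled(self) -> bool:
        return not self.controller_tracked

    @property
    def active_modality(self) -> str:
        if self.gaze_selected:
            return "Gaze Pointer"
        return "XR Controllers" if self.controller_tracked else "Hand Tracking"
```

Keeping the tracking status and the Gaze Pointer toggle as separate flags means that picking up or putting down a controller changes the underlying modality without discarding the user's Gaze Pointer choice.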

Refer to QCHT Hand Tracking for related documentation.

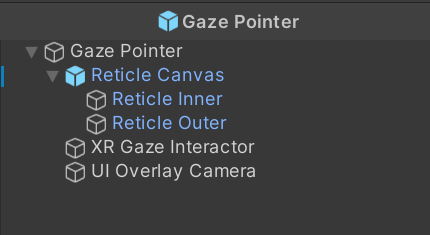

Gaze Pointer

The Gaze Pointer prefab consists of the following GameObjects:

The Gaze Interaction UI component is added to the XR Gaze Interactor GameObject. It manages how the XR Gaze Interactor component interacts with UI objects in the scene, including the pointer's timer duration and "click" functionality.
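A gaze "click" driven by a timer is commonly implemented as a dwell timer: the pointer must rest on the same target for a set duration before the click fires. The following is a minimal sketch of that technique under stated assumptions, not the Gaze Interaction UI component's code; the class name, the `duration` field, and the fire-once-per-gaze behavior are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DwellClickTimer:
    """Hypothetical dwell timer: fires one "click" after `duration` seconds of gaze."""

    duration: float
    target: Optional[str] = None
    elapsed: float = 0.0
    fired: bool = False

    def update(self, hovered: Optional[str], dt: float) -> Optional[str]:
        """Advance by `dt` seconds; return the clicked target, or None."""
        if hovered != self.target:
            # Gaze moved to a new target (or away): restart the timer.
            self.target = hovered
            self.elapsed = 0.0
            self.fired = False
            return None
        if hovered is None or self.fired:
            return None
        self.elapsed += dt
        if self.elapsed >= self.duration:
            self.fired = True  # assumption: require looking away before re-firing
            return hovered
        return None
```

Calling `update` once per frame with the currently hovered UI element returns that element exactly once when the dwell time is reached.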

Currently, the Gaze Pointer can only interact with UI objects in the scene that have an XR Simple Interactable component, whereas the Pointer Controller can interact with both UI and 3D objects.

The XR Gaze Interactor prefab also has a Spaces Composition Layer component. See the Unity Gaze Pointer Composition Layer Sample for documentation on using composition layers to render view-locked content.

Input Cheat Sheet

Buttons used for input actions:

| Action | Host Controller | XR Controller Right | XR Controller Left |
|---|---|---|---|
| Select | Tap on Trackpad | Right Trigger Button | Left Trigger Button |
| Gaze/Pointer switch | Menu Button | None | Left Menu Button |
| Touchpad | Trackpad | Right Joystick | Left Joystick |
| Anchor Position Confirmation | Tap on Trackpad | Any Trigger Button | Any Trigger Button |

Controller Haptics

Controller haptics are sent to the XR Controller Manager through the Interaction Manager's SendHapticImpulse function. SendHapticImpulse is called when a UI button is pressed or while scrolling, and it triggers a haptic impulse on both the Host Controller and the XR Controllers.

Currently, haptic feedback is played on both XR Controllers, regardless of which one triggers the action.
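The current haptics behavior is a broadcast: one call fans out to every connected controller, and the originating controller is ignored. This sketch models that fan-out only. `FakeController`, `HapticsBroadcaster`, and `send_haptic_impulse` are hypothetical Python stand-ins for the Interaction Manager's SendHapticImpulse path, and clamping the amplitude to a normalized 0 to 1 range is an assumption.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class FakeController:
    """Hypothetical stand-in for a controller that can play haptics."""

    name: str
    received: List[Tuple[float, float]] = field(default_factory=list)

    def play(self, amplitude: float, duration: float) -> None:
        self.received.append((amplitude, duration))


class HapticsBroadcaster:
    """Hypothetical model of the broadcast haptics behavior."""

    def __init__(self, controllers: List[FakeController]) -> None:
        self.controllers = controllers

    def send_haptic_impulse(
        self, amplitude: float, duration: float, source: Optional[str] = None
    ) -> None:
        # `source` is accepted but ignored: every controller vibrates,
        # regardless of which one triggered the action.
        amplitude = min(max(amplitude, 0.0), 1.0)  # assumed normalized range
        for controller in self.controllers:
            controller.play(amplitude, duration)
```

Routing feedback only to the originating controller would mean filtering `self.controllers` by `source` inside `send_haptic_impulse`.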

Controller Animations for XR Controllers

Each XR Controller in XRControllers references its XR Controller prefab, which contains the controller mesh with blend shapes for the buttons.

Each prefab has an XR Controller Input Animation script attached. This script updates the blend shape weights with the values received from each controller button, which produces the button animations.
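Driving a button animation from input usually means mapping a normalized button value to a blend shape weight each frame. The sketch below shows that mapping in isolation. It assumes button values in the 0 to 1 range and a 0 to 100 weight range (the range Unity blend shape weights commonly use); the function names and button keys are hypothetical and are not taken from the XR Controller Input Animation script.

```python
from typing import Dict

# Assumption: blend shape weights span 0-100, as is common in Unity.
MAX_BLEND_WEIGHT = 100.0


def button_to_blend_weight(value: float, max_weight: float = MAX_BLEND_WEIGHT) -> float:
    """Map a button value (0 = released, 1 = fully pressed) to a blend weight."""
    clamped = min(max(value, 0.0), 1.0)
    return clamped * max_weight


def update_blend_weights(button_values: Dict[str, float]) -> Dict[str, float]:
    """Return one blend shape weight per button (button names are hypothetical)."""
    return {button: button_to_blend_weight(v) for button, v in button_values.items()}
```

For example, a trigger pulled a quarter of the way (`0.25`) maps to a weight of `25.0`, and out-of-range readings are clamped so the mesh never over-deforms.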

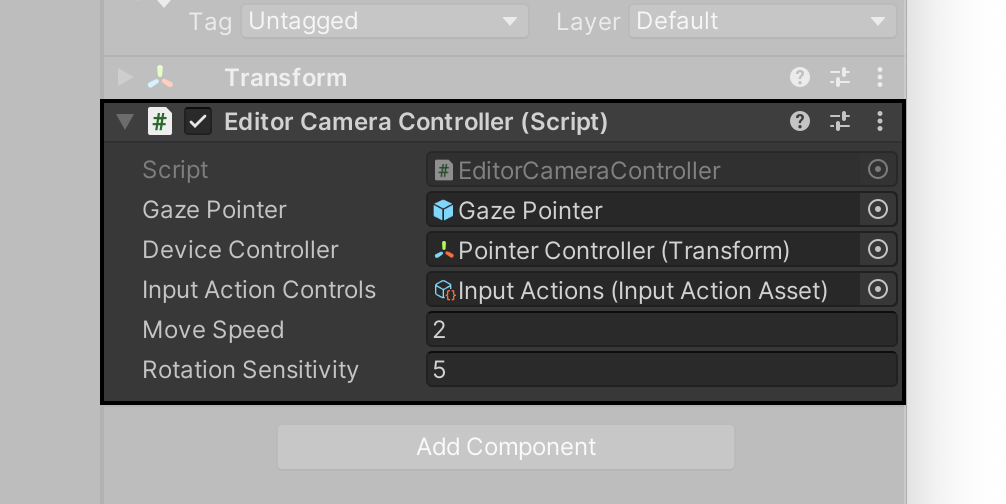

Editor Camera Controller

Because building to a device can be time-consuming, the Editor Camera Controller allows quick testing within the Unity Editor itself and provides a keyboard shortcut to switch between the Gaze Pointer and the controller. The shortcut key is printed to the Editor console.

The XR Device Simulator is also compatible with the Snapdragon Spaces samples and can be used to simulate interactions in the Editor.

Spaces Hand Tracking Button Binding

Each button, toggle, or slider prefab in the XR Interaction Toolkit Sample has a Spaces Hand Tracking Button Binding component, which disables unnecessary XR Simple Interactable components and Snapping Volumes for interaction methods other than Hand Tracking.

Interaction Packages

The Snapdragon Spaces plugin works well with a number of interaction packages. Refer to the package-specific pages for details on how to get started with each: