Hand Tracking Sample

This sample demonstrates how to enable the Hand Tracking feature, how to use the data returned by the SpacesHandTracking feature, and how to enable hand interactions. To use this feature, enable it in the Snapdragon Spaces plugin settings under Project Settings > Snapdragon Spaces plugin.

Make sure the OpenXRHandTracking plugin is disabled so that the standard Hand Tracking does not override the Spaces Hand Tracking.

How the sample works

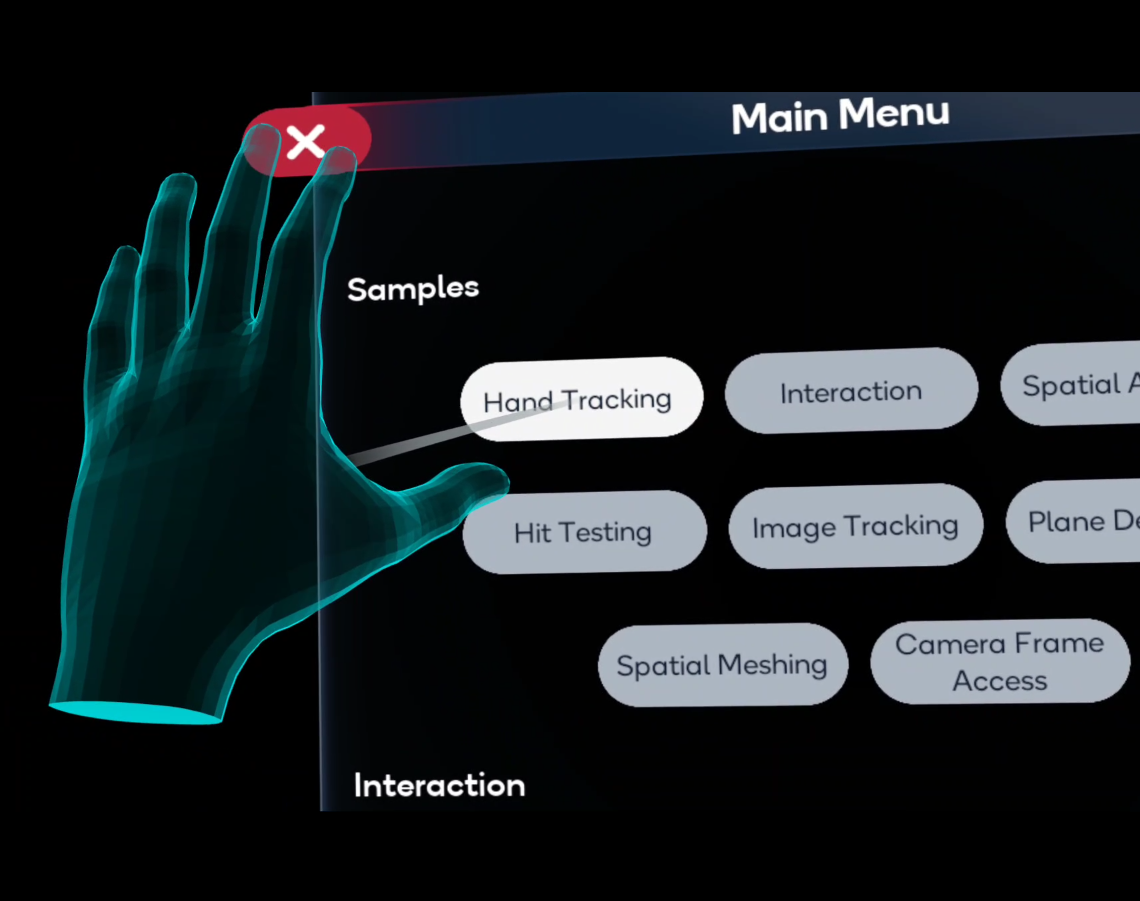

When the sample is opened, hand tracking starts as soon as the user places their hands in the field of view of the headset cameras. A mesh is shown for each hand. If hand tracking is the active input mode, the 3D actors in the scene are interactable. Different interactable actors are spawned in the scene depending on the selected interaction option.

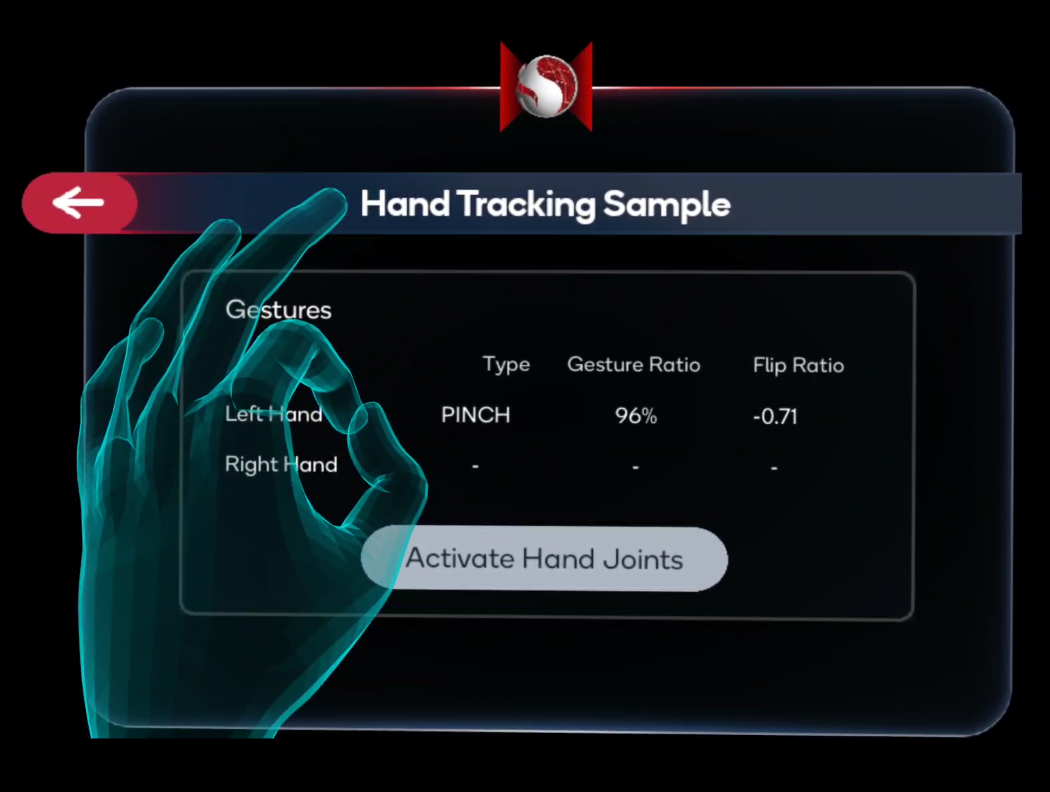

The image above shows the hand mesh.

Hand Tracking Manager

The sample uses the BP_SpacesARManager_HandTracking blueprint asset (located under SnapdragonSpacesSamples Content > SnapdragonSpaces > Samples > HandTracking > Placeable). This asset controls the interaction options available in the scene: distal and proximal interaction.

To enable or disable hand tracking, call Toggle Spaces Feature with Hand Tracking as the feature. This lets you start and stop hand tracking on demand. For example, you can turn it off in parts of the project where it is not needed.

In Snapdragon Spaces versions 0.13.0 and 0.14.0, there was another option to enable Hand Tracking. This was "Use hand tracking from start", which could be found in Project Settings > Plugins > Snapdragon Spaces > General > Hand Tracking.

Hand meshing is enabled using Toggle AR Capture with Hand Meshing as the capture type. It can also be enabled using Toggle Spaces Feature.

In Snapdragon Spaces versions prior to 0.12.1, hand meshing was enabled with Set Hand Mesh Status. Mesh visibility was managed by checking the status with Get Hand Mesh Status.

Both hands are represented as Motion Controllers. Call Get Motion Controller Data to access the positions and rotations of the hand joints.

In Snapdragon Spaces versions prior to 0.15.0, the hand tracking manager rendered the hand joints using the Motion Controller Data. This was removed to put more emphasis on hand interaction. The functions that drew the joints are still available inside the hand tracking manager blueprint, where Draw Joints displays the hand joints. Draw Joints extracts the location and rotation of each hand joint from the Motion Controller Data so that each joint's representation is placed correctly. The BP_SpacesHandJoint_Visual actor is used for this representation.

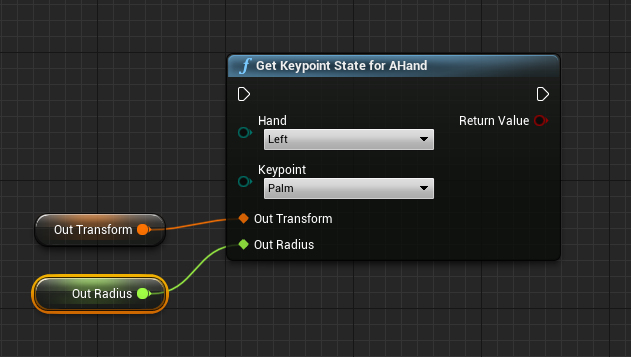

To get the transform of a hand joint, use the function GetKeypointStateForAHand. It takes two parameters: an EControllerHand value (the right or left hand) and an EHandKeypoint value (the joint). It outputs, by reference, the transform of that joint and its radius. It returns a Boolean value that indicates whether the hand joint was found.
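The calling pattern above returns a Boolean and writes the transform and radius to by-reference outputs. The standalone C++ sketch below illustrates it. Every name in it (Hand, Keypoint, JointState, FakeHandSource, QueryKeypoint) is a hypothetical stand-in and not the Snapdragon Spaces API. The transform is simplified to a position. The point it shows is that the outputs should only be read when the call returns true.

```cpp
#include <cassert>
#include <map>
#include <utility>

// Hypothetical stand-ins for EControllerHand / EHandKeypoint (not the plugin's types).
enum class Hand { Left, Right };
enum class Keypoint { Palm, Wrist, IndexTip, ThumbTip };

struct Vec3 { float X = 0, Y = 0, Z = 0; };

// Simplified joint state: position only, plus the joint radius.
struct JointState { Vec3 Location; float Radius = 0.0f; };

// Hypothetical source of the joints tracked in the current frame.
class FakeHandSource {
public:
    void SetJoint(Hand H, Keypoint K, JointState S) { Joints[{H, K}] = S; }

    // Returns true when the joint was found and fills the reference outputs.
    bool QueryKeypoint(Hand H, Keypoint K, Vec3& OutLocation, float& OutRadius) const {
        auto It = Joints.find({H, K});
        if (It == Joints.end()) {
            return false;  // outputs are left untouched
        }
        OutLocation = It->second.Location;
        OutRadius = It->second.Radius;
        return true;
    }

private:
    std::map<std::pair<Hand, Keypoint>, JointState> Joints;
};
```

Checking the Boolean before using the outputs avoids acting on stale values when a hand leaves the cameras' field of view.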

Hand Meshing Viewer

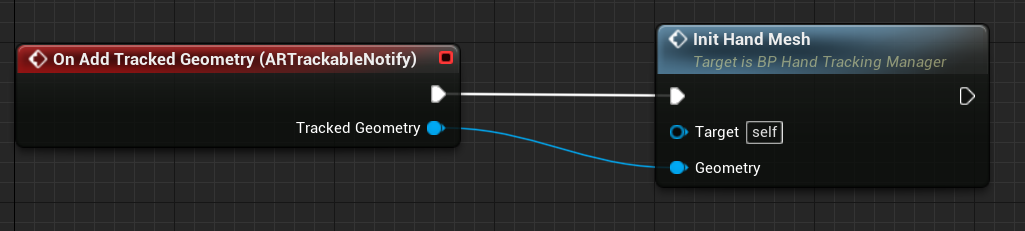

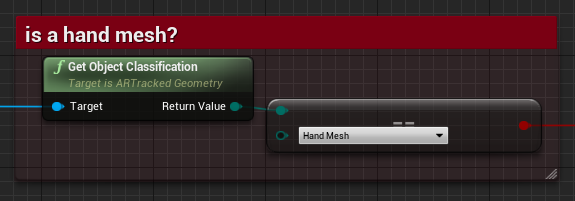

BP_SpacesHandMeshing is located under SnapdragonSpacesSamples Content > SnapdragonSpaces > Samples > HandTracking > Placeable. It binds to events from the AR Trackable Notify component to react to AR trackable geometry changes, in this case to render the hand meshes, and it handles the On Add/Update Tracked Geometry events. Different types of objects can be registered as UARTrackedGeometry. To confirm that a tracked geometry is a hand mesh, its object classification must be EARObjectClassification::HandMesh. If hand tracking is the input mode, BP_SpacesHandMeshing is spawned in AC_SpacesController_HandTracking and used as the hand meshing renderer.

The GetObjectClassification function can be used to check the classification.

In Blueprint nodes, Unreal Engine displays parameters passed by reference as return values. You must pass both the reference to the actor that represents the hand mesh and the variable that holds the number of vertices. Otherwise, multiple actors will be created.
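The standalone C++ sketch below shows why the actor reference has to be passed back in. The first event for a hand mesh creates a renderer, later update events reuse it, and geometries with any other classification are ignored. Classification, TrackedGeometry, MeshRenderer and HandMeshViewer are hypothetical stand-ins for EARObjectClassification, UARTrackedGeometry and the hand mesh actor. They are not Unreal Engine types.

```cpp
#include <cassert>
#include <memory>
#include <unordered_map>

// Hypothetical stand-in for EARObjectClassification.
enum class Classification { Unknown, Plane, HandMesh };

// Hypothetical payload of an add/update tracked geometry event.
struct TrackedGeometry {
    int Id = 0;
    Classification Kind = Classification::Unknown;
    int VertexCount = 0;
};

// Hypothetical stand-in for the actor that renders one hand mesh.
struct MeshRenderer {
    int VertexCount = 0;
};

class HandMeshViewer {
public:
    // Handles both "added" and "updated" events.
    void OnTrackedGeometry(const TrackedGeometry& Geometry) {
        // Only hand meshes are rendered.
        if (Geometry.Kind != Classification::HandMesh) {
            return;
        }
        // Reuse the renderer kept for this geometry; create one only the first time.
        // Skipping this lookup (not passing the actor back) would create a new
        // renderer on every event.
        std::unique_ptr<MeshRenderer>& Renderer = Renderers[Geometry.Id];
        if (!Renderer) {
            Renderer = std::make_unique<MeshRenderer>();
            ++CreatedCount;
        }
        Renderer->VertexCount = Geometry.VertexCount;
    }

    int CreatedCount = 0;
    std::unordered_map<int, std::unique_ptr<MeshRenderer>> Renderers;
};
```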

The hand mesh material has been adapted to the new hand mesh system, which causes a flat shading effect.

For a quick test, you can render the hands with the XRVisualization plugin by connecting the Motion Controller data to the Render Motion Controller function.

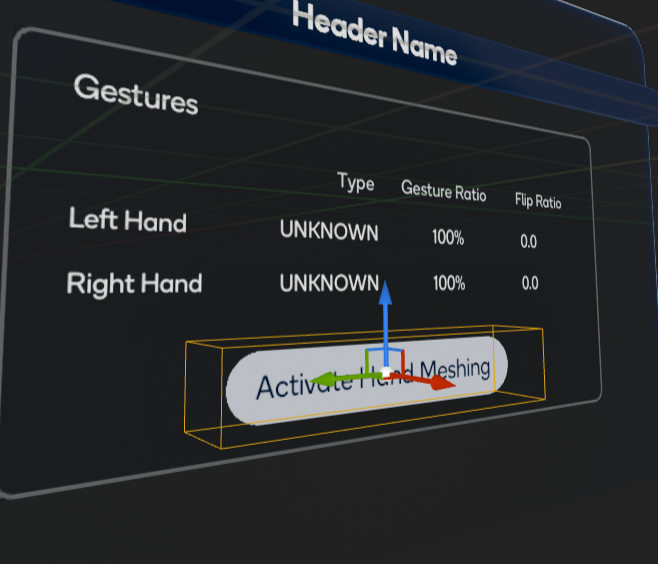

Gesture Status

From Snapdragon Spaces version 0.15.0 onwards, the Hand Tracking Sample no longer accesses hand gesture data directly.

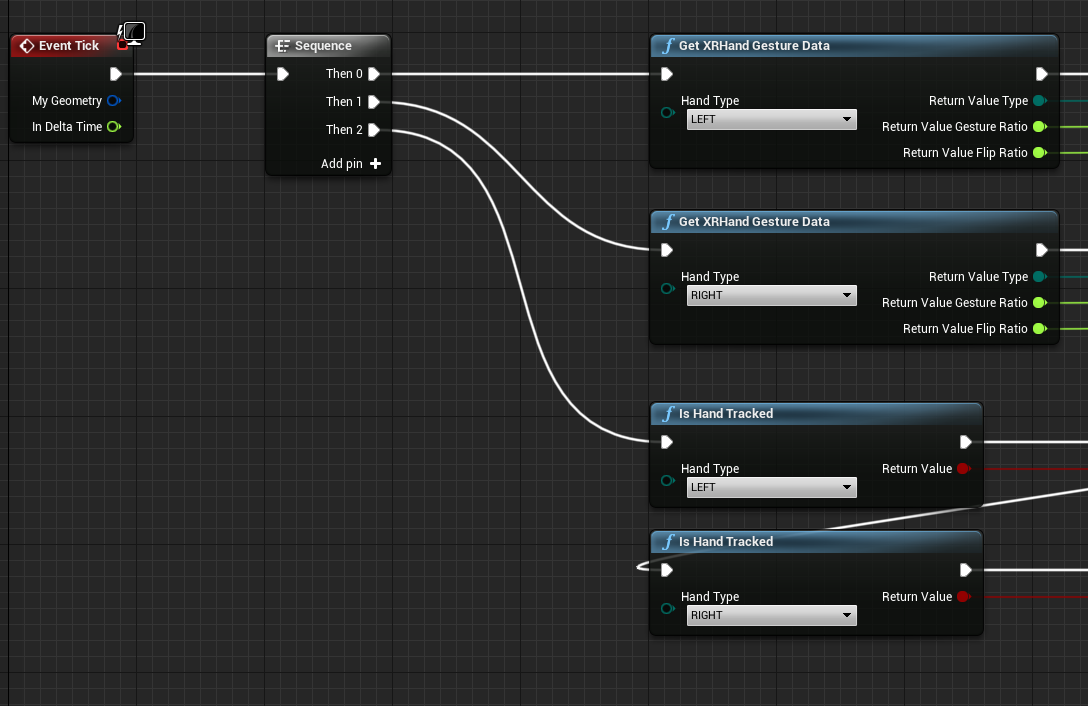

Hand gesture recognition uses the functions of the HGestures Blueprint Library class. Each frame, both hands are checked to see whether they are tracked and which gesture they are making. This is done with the methods Get XRHand Gesture Data and Is Hand Tracked.

The gesture data is composed of the following parameters:

- Type: Enum value indicating which gesture was detected for the hand. It is one of { UNKNOWN, OPEN_HAND, GRAB, PINCH, ERROR }.

- GestureRatio: Float value between 0 and 1, indicating how much the gesture is applied.

- FlipRatio: Float value between -1 and 1, indicating whether the hand gesture is detected from the back (-1), from the front (1) or in between.
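The three fields can be modelled in a standalone C++ sketch that also shows one way to read them. GestureType, GestureData, IsGestureHeld and DescribeFacing are hypothetical names. The 0.9 threshold and the ±0.5 flip cut-offs are arbitrary illustration values, not values used by the sample.

```cpp
#include <cassert>
#include <string>

// Hypothetical mirror of the gesture types listed above.
enum class GestureType { UNKNOWN, OPEN_HAND, GRAB, PINCH, ERROR };

struct GestureData {
    GestureType Type = GestureType::UNKNOWN;
    float GestureRatio = 0.0f;  // 0..1: how much the gesture is applied
    float FlipRatio = 0.0f;     // -1 (seen from the back) .. 1 (seen from the front)
};

// True when the wanted gesture is detected and applied at least Threshold.
bool IsGestureHeld(const GestureData& Data, GestureType Wanted, float Threshold = 0.9f) {
    assert(Threshold >= 0.0f && Threshold <= 1.0f);
    return Data.Type == Wanted && Data.GestureRatio >= Threshold;
}

// Coarse description of which side of the hand faces the cameras.
std::string DescribeFacing(const GestureData& Data) {
    if (Data.FlipRatio <= -0.5f) return "back";
    if (Data.FlipRatio >= 0.5f) return "front";
    return "in between";
}
```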

Here is an example with an interface that shows the gesture type and ratios detected for the left hand.

Hand Tracking Interaction

This section explains the different actors and components needed to use hand tracking interaction.

Hand tracking interaction is used in every sample of the project, not only in the Hand Tracking sample. It lets users interact with widget actors and 3D actors that support hand tracking interaction.

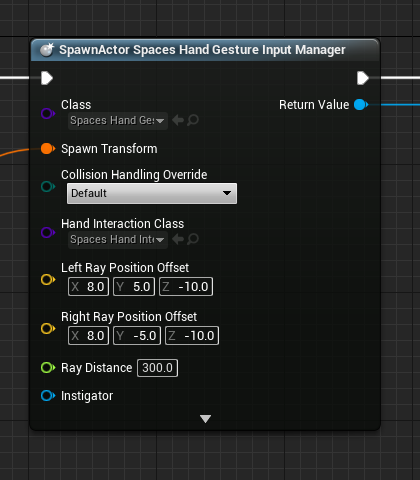

Spaces Hand Gesture Input Manager Actor

This is the most important actor for hand tracking interaction. To use hand tracking as the input mode, you must add this actor to the level. We recommend spawning it with the Spawn Actor of Class node.

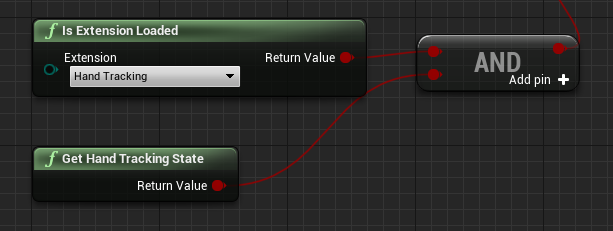

Always check if hand tracking is available before adding or spawning this actor into the level.

The Spaces Hand Gesture Input Manager actor is responsible for listening to hand gestures in real time and reporting the actions based on these hand gestures using delegates. It spawns and owns two actors of class ASpacesHandInteraction, one for each hand, which are needed to perform the interactions. The following sections list different variables and functions that are available to access and use in this class.

Variables

- FOnSpacesHandPinch OnSpacesHandPinchLeft and FOnSpacesHandPinch OnSpacesHandPinchRight: Delegates triggered to inform about the Pinch gesture state.

- FOnSpacesHandOpen OnSpacesHandOpenLeft and FOnSpacesHandOpen OnSpacesHandOpenRight: Delegates triggered to inform about the Open Hand gesture state.

- FOnSpacesHandGrab OnSpacesHandGrabLeft and FOnSpacesHandGrab OnSpacesHandGrabRight: Delegates triggered to inform about the Grab gesture state.

- FOnSpacesHandInteractionStatusUpdated OnSpacesHandInteractionStatusUpdated: Delegate triggered when the hand interaction state is updated. It reports the status as a Boolean value.

- TSubclassOf<ASpacesHandInteraction> HandInteractionClass: Subclass of Spaces Hand Interaction Actor to spawn as Hand Interaction actors.

- FVector LeftRayPositionOffset and FVector RightRayPositionOffset: Ray offsets of the two Hand Interaction actors.

- float RayDistance: Ray distance of both Hand Interaction actors.

Functions

- ASpacesHandInteraction* GetHandLeftInteraction() const and ASpacesHandInteraction* GetHandRightInteraction() const: Return the Hand Interaction actors.

- void SetHandInteractionState(bool active): Enables or disables hand interaction. This function affects the whole hand interaction system. Hand interaction is disabled by default.

- bool GetHandInteractionState() const: Returns the status of the hand interaction system.
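The manager's delegates follow a publish/subscribe pattern. The standalone C++ sketch below models that pattern with std::function. MulticastDelegate, GestureInputManagerModel and ReportLeftPinch are hypothetical stand-ins for the Unreal Engine delegate types and the actor. The Boolean payload of the pinch delegate is an assumption. Only two behaviors come from the descriptions above: hand interaction is disabled by default, and the status update reports a Boolean.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Minimal multicast delegate: Broadcast invokes every bound callback in order.
template <typename... Args>
class MulticastDelegate {
public:
    void Add(std::function<void(Args...)> Callback) { Callbacks.push_back(std::move(Callback)); }
    void Broadcast(Args... Values) const {
        for (const auto& Callback : Callbacks) Callback(Values...);
    }
private:
    std::vector<std::function<void(Args...)>> Callbacks;
};

// Hypothetical model of the input manager's interaction switch and one gesture delegate.
class GestureInputManagerModel {
public:
    MulticastDelegate<bool> OnInteractionStatusUpdated;  // reports the new status
    MulticastDelegate<bool> OnPinchLeft;                  // assumption: payload = "pinching"

    void SetHandInteractionState(bool Active) {
        bInteractionActive = Active;
        OnInteractionStatusUpdated.Broadcast(bInteractionActive);
    }
    bool GetHandInteractionState() const { return bInteractionActive; }

    // Called by (hypothetical) per-frame gesture polling code.
    void ReportLeftPinch(bool Pinching) { OnPinchLeft.Broadcast(Pinching); }

private:
    bool bInteractionActive = false;  // disabled by default
};
```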

For an example of how the Spaces Hand Gesture Input Manager actor is used, see AC_SpacesController_HandTracking (located under SnapdragonSpacesSamples Content > SnapdragonSpaces > Common > Core > Components).

Spaces Hand Interaction Actor

The Spaces Hand Interaction actor handles interaction with the hand interactable elements in a level, such as 3D Widgets and other types of actors. It supports two kinds of interaction:

- Distal hand interaction, which performs ray casting over the scene elements.

- Proximal hand interaction, which is activated when the hands touch an interactable actor.

The actor is represented by a ray that starts at the specified hand. The ray is always visible except during proximal interaction. It has two modes: active (when ray casting over a hand interactable actor in the scene) and inactive. The color and length of the ray change depending on the mode. To interact with UI, use distal interaction and the pinch gesture.

In the Snapdragon Spaces Sample project for Unreal Engine, the Hand Gesture Input Manager spawns two Spaces Hand Interaction actors, one for each hand. Adding the Hand Gesture Input Manager to the scene is therefore enough.

The Spaces Hand Interaction actor can be customized using the following variables and functions.

Variables

- EControllerHand HandType: Indicates whether the hand is right or left.

- FVector RayPositionOffset: Regulates the position of the ray as an offset from the shoulder location.

- float RayDistance: Ray casting distance in centimeters.

- float SnappingDistanceTolerance: Distance at which the snapping effect over a Spaces Snapping Volume component stops.

- float DistalRayThresholdDistance: Distance to the focused interactable actor that determines whether the ray is visible, switching between proximal and distal interaction modes.

- float RayRepresentationLength: Ray length when using distal interaction and not hitting any interactable actor.

- UStaticMesh* RayMesh: Mesh that visually represents the hand ray. The mesh is used for an Unreal Engine Spline Mesh Component.

- UMaterialInterface* RayMaterial: Material of the ray mesh. It must be compatible with the Unreal Engine Spline Mesh Component.

- FLinearColor HitRayColor: Ray color when hitting an interactable object.

- FLinearColor NoHitRayColor: Ray color when not hitting an interactable object.

- TSubclassOf<ASpacesHandJointCollider> HandJointColliderActorClass: Subclass of ASpacesHandJointCollider to use for proximal interaction. We recommend using the ASpacesHandJointCollider Blueprint located in the plugin folder SnapdragonSpaces > Content > HandTracking > Actors as the subclass.

- For the Unreal Engine Spline Mesh Component, use meshes with enough geometry in the middle to bend when there are enough spline points to create the bend effect.

- To make a material compatible with Spline Mesh Components, set the option Used with Spline Meshes to true inside the material (the option is in Details > Usage).
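The visibility, color and length rules described for the ray can be condensed into one decision function. This standalone C++ sketch is an interpretation, not the plugin's code. LinearColor, RaySettings, RayAppearance and ComputeRay are hypothetical. The default distances and colors are illustrative. Ending the ray at the hit point while it hits an interactable actor is an assumption.

```cpp
#include <cassert>

struct LinearColor { float R = 0.0f, G = 0.0f, B = 0.0f, A = 1.0f; };

// Hypothetical settings mirroring DistalRayThresholdDistance, RayRepresentationLength,
// HitRayColor and NoHitRayColor. Values are illustrative only.
struct RaySettings {
    float DistalRayThresholdDistance = 20.0f;  // cm
    float RayRepresentationLength = 50.0f;     // cm
    LinearColor HitRayColor{0.0f, 1.0f, 0.0f, 1.0f};
    LinearColor NoHitRayColor{1.0f, 1.0f, 1.0f, 1.0f};
};

struct RayAppearance {
    bool bVisible = true;
    float Length = 0.0f;
    LinearColor Color;
};

// bHit: the ray hits a hand interactable actor; HitDistance: distance to that hit.
// FocusedDistance: hand-to-focused-actor distance, negative when nothing is focused.
RayAppearance ComputeRay(const RaySettings& S, bool bHit, float HitDistance, float FocusedDistance) {
    RayAppearance Out;
    // Close to the focused actor: proximal mode, so the ray is hidden.
    if (FocusedDistance >= 0.0f && FocusedDistance < S.DistalRayThresholdDistance) {
        Out.bVisible = false;
        return Out;
    }
    // Distal mode: color and length depend on whether an interactable actor is hit.
    Out.Color = bHit ? S.HitRayColor : S.NoHitRayColor;
    Out.Length = bHit ? HitDistance : S.RayRepresentationLength;
    return Out;
}
```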

Functions

- FTransform GetGrabPointTransform() const: Returns the grab point transform. For distal interaction it returns the ray end point. For proximal interaction it returns the hand transform.

- FTransform GetOffsetTransform() const: Returns the offset transform between the interactable actor and the grab transform.

- void SetHandInteractionState(bool active): Enables or disables the interaction state of this actor.
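The grab point and the offset transform work together so that a grabbed actor does not jump onto the hand or the ray end. The standalone C++ sketch below shows the idea with translation only. Vec3, InteractionMode, GrabPoint and GrabFollower are hypothetical. A real FTransform offset would also carry rotation and scale.

```cpp
#include <cassert>

struct Vec3 { float X = 0, Y = 0, Z = 0; };
Vec3 operator+(Vec3 A, Vec3 B) { return {A.X + B.X, A.Y + B.Y, A.Z + B.Z}; }
Vec3 operator-(Vec3 A, Vec3 B) { return {A.X - B.X, A.Y - B.Y, A.Z - B.Z}; }

enum class InteractionMode { Distal, Proximal };

// Grab point as described above: ray end for distal, hand for proximal.
Vec3 GrabPoint(InteractionMode Mode, Vec3 RayEnd, Vec3 HandLocation) {
    return Mode == InteractionMode::Distal ? RayEnd : HandLocation;
}

// Hypothetical translation-only grab: the offset captured when the grab starts
// keeps the actor at the same relative position to the moving grab point.
class GrabFollower {
public:
    void BeginGrab(Vec3 ActorLocation, Vec3 GrabLocation) { Offset = ActorLocation - GrabLocation; }
    Vec3 TargetLocation(Vec3 GrabLocation) const { return GrabLocation + Offset; }
private:
    Vec3 Offset;
};
```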

See BP_SpacesHandInteraction (located under SnapdragonSpaces > Content > HandTracking > Actors) for an example of how to customize this actor. You can also create a Blueprint actor based on this class and use it as the hand interaction actor.

Spaces Hand Interactable Component

Adding this component to an actor makes the actor compatible with hand interaction. The pinch and grab gestures can be used to grab an actor that has this component. Distal interaction allows only translation. Proximal interaction allows translation, rotation and scale, and scaling has to be performed with two hands. You can adjust the following variables to customize the component's behavior.

Variables

- float LerpFactor: Regulates the translation and rotation speed of the actor.

- float LerpFactor: Regulates the scale speed of the actor.

- float MinimumScaleFactor: Minimum scale factor that can be applied to the actor.

- float MaximumScaleFactor: Maximum scale factor that can be applied to the actor.

- bool bApplyTranslation: Indicates whether or not to translate the actor.

- bool bApplyRotation: Indicates whether or not to rotate the actor.

- bool bApplyScale: Indicates whether or not to scale the actor.

- FOnSpacesHandInteractableStateChanged OnSpacesHandInteractableStateChanged: Delegate/Dispatcher that communicates the status of the hand interaction over the actor. It returns the state as an ESpacesHandInteractableState enum (see how this enum works in the following code sample).

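```cpp
// Hand interaction state reported by OnSpacesHandInteractableStateChanged.
UENUM(BlueprintType, Category = "Snapdragon Spaces Hand Interaction")
enum class ESpacesHandInteractableState : uint8
{
    Focused = 0,   // a hand is focusing the actor
    Grabbed = 1,   // the actor is being grabbed
    UnFocused = 2  // the actor is no longer focused
};
```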
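The dispatcher reports one of these three values, so a listener typically branches on them. The standalone C++ sketch below redeclares the enum without the UENUM macro so that it compiles outside Unreal Engine. HighlightState and OnStateChanged are hypothetical names. The outline and follow reactions are only examples of what an actor might do.

```cpp
#include <cassert>
#include <cstdint>

// Same values as ESpacesHandInteractableState, without the Unreal UENUM macro.
enum class ESpacesHandInteractableState : std::uint8_t {
    Focused = 0,
    Grabbed = 1,
    UnFocused = 2
};

// Hypothetical visual feedback an actor might apply when the state changes.
struct HighlightState {
    bool bOutlined = false;
    bool bFollowingHand = false;
};

HighlightState OnStateChanged(ESpacesHandInteractableState State) {
    HighlightState Out;
    switch (State) {
        case ESpacesHandInteractableState::Focused:
            Out.bOutlined = true;  // show that the actor can be grabbed
            break;
        case ESpacesHandInteractableState::Grabbed:
            Out.bOutlined = true;
            Out.bFollowingHand = true;  // the actor now follows the grab point
            break;
        case ESpacesHandInteractableState::UnFocused:
            break;  // back to the default look
    }
    return Out;
}
```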

An example of the use of this component is in the BP_SpacesHandInteractable_Cube blueprint actor, located under the plugin folder SnapdragonSpacesSamples Content > SnapdragonSpaces > Samples > HandTracking > Placeable.
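One way to read the LerpFactor and scale limits listed above is as per-update smoothing toward a target, with the requested scale clamped first. The standalone C++ sketch below shows that interpretation, which is an assumption and not the component's actual implementation. The linear-interpolation formula is assumed, and so is treating the scale factor as a multiplier. The name ScaleLerpFactor is hypothetical and is used here only to tell the two factors apart, because the list above names both of them LerpFactor.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical settings mirroring the component variables (illustrative values).
struct InteractableSettings {
    float LerpFactor = 0.5f;       // translation speed per update, 0..1 (assumed meaning)
    float ScaleLerpFactor = 0.5f;  // hypothetical name for the scale-speed factor
    float MinimumScaleFactor = 0.5f;
    float MaximumScaleFactor = 2.0f;
    bool bApplyTranslation = true;
    bool bApplyScale = true;
};

float Lerp(float A, float B, float Alpha) { return A + (B - A) * Alpha; }

// One update along a single axis: move part of the way toward the target.
float StepTranslation(const InteractableSettings& S, float Current, float Target) {
    if (!S.bApplyTranslation) return Current;
    return Lerp(Current, Target, S.LerpFactor);
}

// One scale update: clamp the requested factor (for example from two-hand
// distance), then smooth toward it.
float StepScale(const InteractableSettings& S, float CurrentFactor, float RequestedFactor) {
    assert(S.MinimumScaleFactor <= S.MaximumScaleFactor);
    if (!S.bApplyScale) return CurrentFactor;
    const float Clamped = std::min(std::max(RequestedFactor, S.MinimumScaleFactor), S.MaximumScaleFactor);
    return Lerp(CurrentFactor, Clamped, S.ScaleLerpFactor);
}
```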

Spaces Snapping Volume Component

The Spaces Snapping Volume component inherits from the Unreal Engine Box Component. Its main use is to snap the end of the SpacesHandInteraction actor's ray to a desired location within another actor. This is especially useful for interactable 3D Widget components, such as buttons, checkboxes or sliders, but it works with any type of 3D actor. Add the component inside an actor and place it manually at the desired position, for example, on top of a button in a 3D widget, as shown in the following image.

When you use this component with UI, follow these guidelines:

- The location and size of the box has to match the form of the 3D widget component, otherwise there may be unwanted effects such as flickering when ray casting outside of the 3D widget actor.

- The direction of the X axis of the component has to match the direction of the 3D widget component. This is needed to properly interact with the widget component.

To customize the behavior of this component, there are different variables and functions that can be used.

Variables

- bool bSnap: Determines whether the component is used for snapping. One case for using this component without snapping is a 3D widget slider. The end of the ray cannot be snapped to the slider handle, but the hand ray still needs to be visible for a good user experience.

- bool bIsUI: This option must be enabled when the component is used for interaction with 3D Widget UI components. It can be disabled when the component is used in any other actor.

- bool bIsDisabled: Determines whether the component collision is active from the start. It is false by default.

Functions

- void SetCollisionDisabledState(bool disabled): Sets the value of bIsDisabled.

- void UpdateCollisionStatus(bool active): Updates the collision status of the component. If bIsDisabled is true, the collision is disabled regardless of the input value.
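The collision rules of the snapping volume, together with the SnappingDistanceTolerance of the hand interaction actor, can be modelled as in the standalone C++ sketch below. SnappingVolumeModel, ResolveRayEnd and SnapLocation are hypothetical illustration names. The snapping rule it uses is an assumed reading of the variable descriptions: the ray end snaps to a fixed point while the hit stays within the tolerance. Only the bIsDisabled override in UpdateCollisionStatus is taken directly from the function description.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float X = 0, Y = 0, Z = 0; };

float Distance(Vec3 A, Vec3 B) {
    const float DX = A.X - B.X, DY = A.Y - B.Y, DZ = A.Z - B.Z;
    return std::sqrt(DX * DX + DY * DY + DZ * DZ);
}

// Hypothetical model of the snapping volume's state logic.
class SnappingVolumeModel {
public:
    bool bSnap = true;
    Vec3 SnapLocation;  // point the ray end is pulled to, e.g. a button center

    void SetCollisionDisabledState(bool Disabled) { bIsDisabled = Disabled; }

    // bIsDisabled overrides the requested state, as described above.
    void UpdateCollisionStatus(bool Active) { bCollisionEnabled = Active && !bIsDisabled; }

    bool IsCollisionEnabled() const { return bCollisionEnabled; }

    // Assumed rule: snap while the hit point is within Tolerance of SnapLocation.
    Vec3 ResolveRayEnd(Vec3 HitPoint, float Tolerance) const {
        if (bCollisionEnabled && bSnap && Distance(HitPoint, SnapLocation) <= Tolerance) {
            return SnapLocation;
        }
        return HitPoint;
    }

private:
    bool bIsDisabled = false;       // false by default
    bool bCollisionEnabled = true;  // active from the start because bIsDisabled is false
};
```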

Spaces Distal Interaction Box Actor

BP_SpacesDistalInteractionBox lets you rotate and scale an actor using distal interaction. To use it, add it as a child actor component inside the actor you want to manipulate. BP_SpacesDistalInteractionBox is located under SnapdragonSpaces Content > Hand Tracking > Actors. For an example of how it is added to another actor, see the BP_SpacesHandInteractable_Panda actor, located under SnapdragonSpacesSamples Content > Snapdragon Spaces > Samples > HandTracking > Placeable.

The distal interaction box is composed of several instances of Spaces Distal Manipulator Actor, each of which is called a "manipulation point". There are two kinds of Spaces Distal Manipulator Actor: Spaces Distal Scale Point and Spaces Distal Rotation Point. A manipulation point behaves differently depending on where it is located on the box. The manipulation points at the edges of the box change the scale of the actor. The remaining manipulation points rotate the actor in the X, Y, or Z plane, depending on their position on the box. Use the pinch gesture to interact with the interaction box.