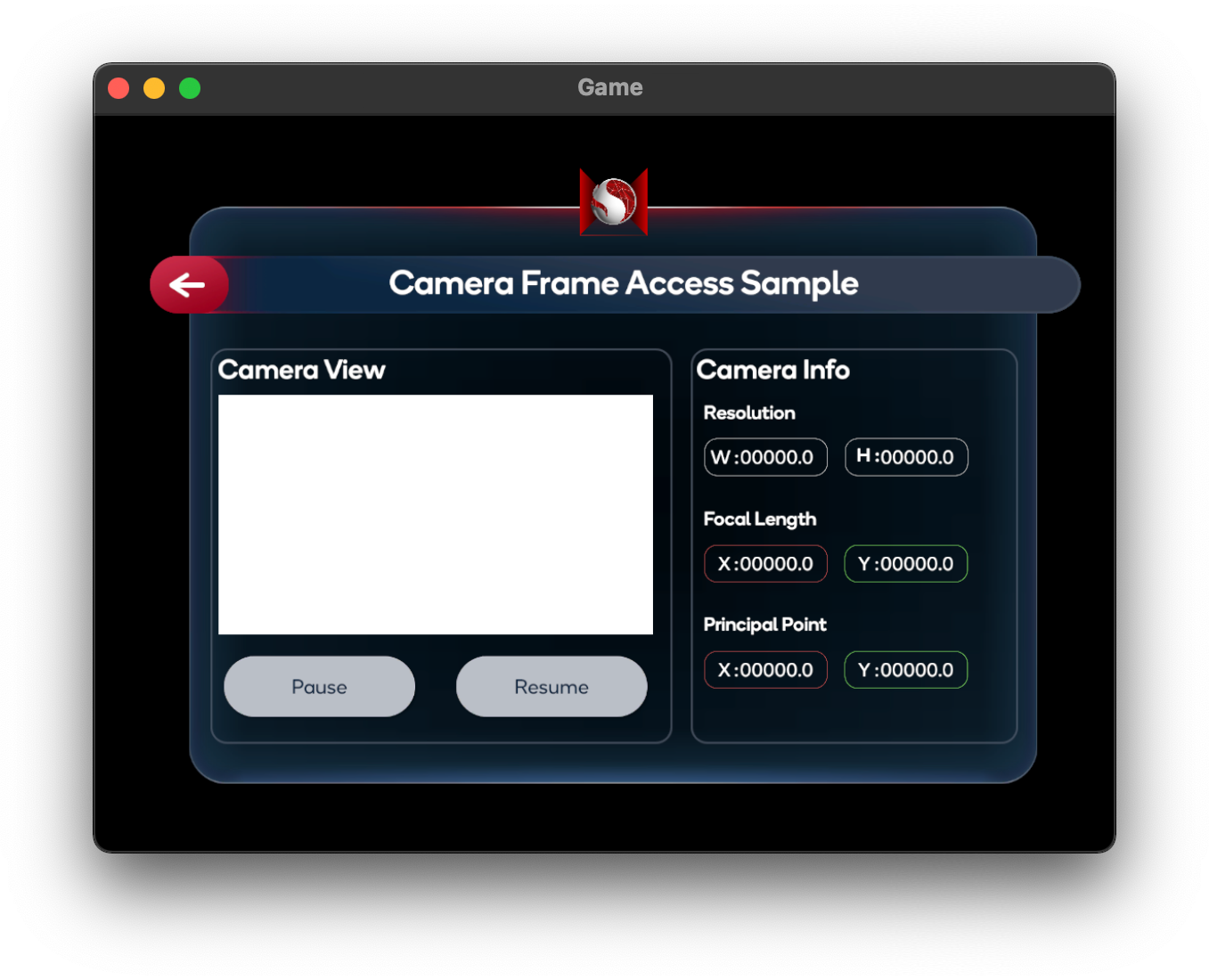

Camera Frame Access Sample

This sample demonstrates how to retrieve RGB camera frames and intrinsic properties for image processing.

For basic information about camera frame access and what AR Foundation's AR Camera Manager component does, please refer to the Unity documentation and the AR Camera Manager API reference.

To use this feature, enable it in the OpenXR plugin settings under Project Settings > XR Plug-in Management > OpenXR (> Android tab).

How the sample works

Adding the AR Camera Manager component to the XR Origin > Main Camera GameObject will enable the camera access subsystem. Upon starting, the subsystem will retrieve valid sensor configurations from the viewer device. If a valid `Y'UV420sp` or `YUY2` sensor configuration is found, the subsystem will select this configuration as the provider for CPU camera images. Conceptually, an AR Camera Manager represents a single camera and will not manage multiple sensors at the same time.
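The selection step described above can be pictured with a small, language-neutral sketch. It is written in Python rather than the sample's Unity C#, and it does not reflect the subsystem's actual implementation: the `SensorConfig` type, the `select_cpu_image_config` function, and the "first supported configuration wins" rule are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

# Pixel formats the text names as usable for CPU camera images.
SUPPORTED_FORMATS = ("Y'UV420sp", "YUY2")


@dataclass(frozen=True)
class SensorConfig:
    """Hypothetical description of one sensor configuration."""
    width: int
    height: int
    pixel_format: str


def select_cpu_image_config(
    configs: Sequence[SensorConfig],
) -> Optional[SensorConfig]:
    """Return the first configuration with a supported pixel format.

    Assumption: the first match is chosen. The real subsystem may rank
    configurations differently (for example by resolution).
    """
    for config in configs:
        if config.pixel_format in SUPPORTED_FORMATS:
            return config
    # No usable configuration: no CPU images can be provided.
    return None


if __name__ == "__main__":
    reported = [
        SensorConfig(640, 480, "RAW10"),
        SensorConfig(1280, 720, "YUY2"),
    ]
    print(select_cpu_image_config(reported))
```

Because only one configuration is returned, the sketch also mirrors the point that a single AR Camera Manager stands for a single camera rather than several sensors at once.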

The sample scene consists of two panels:

- A camera feed panel displaying the latest CPU image from the device camera, with Pause and Resume buttons

- A camera info panel enumerating the various properties of the device camera
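
As an illustration of why the intrinsic properties matter for image processing, the sketch below projects a 3D point in camera space onto the image plane with a standard pinhole model. It is written in Python rather than the sample's Unity C#. The `CameraIntrinsics` type and `project_point` function are hypothetical names, the field layout (focal length, principal point, resolution) is an assumption modeled on what AR Foundation's camera intrinsics expose, and lens distortion is ignored.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class CameraIntrinsics:
    """Hypothetical container for pinhole camera intrinsics, in pixels."""
    focal_length: Tuple[float, float]      # (fx, fy)
    principal_point: Tuple[float, float]   # (cx, cy)
    resolution: Tuple[int, int]            # (width, height)


def project_point(
    intrinsics: CameraIntrinsics,
    point: Tuple[float, float, float],
) -> Tuple[float, float]:
    """Project a camera-space point (x, y, z) to pixel coordinates.

    Uses u = fx * x / z + cx and v = fy * y / z + cy.
    Assumes z points away from the camera and no lens distortion.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    fx, fy = intrinsics.focal_length
    cx, cy = intrinsics.principal_point
    return (fx * x / z + cx, fy * y / z + cy)


if __name__ == "__main__":
    # Made-up values for illustration, not taken from any device.
    intrinsics = CameraIntrinsics((500.0, 500.0), (320.0, 240.0), (640, 480))
    print(project_point(intrinsics, (1.0, -0.5, 2.0)))  # (570.0, 115.0)
```

A point on the optical axis lands on the principal point, which is a quick sanity check when reading intrinsics from a device.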