Camera Frame Access

How to Enable the Feature

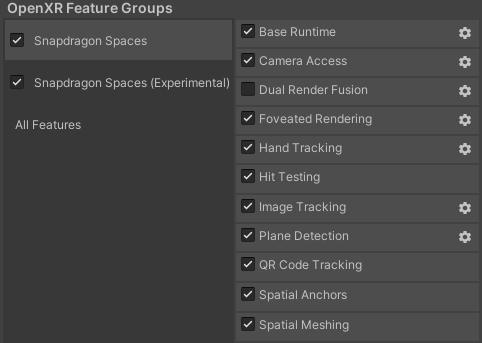

To use Camera Frame Access, enable it in the OpenXR plug-in settings under Project Settings > XR Plug-in Management > OpenXR (> Android Tab) > OpenXR Feature Groups > Snapdragon Spaces > Camera Access.

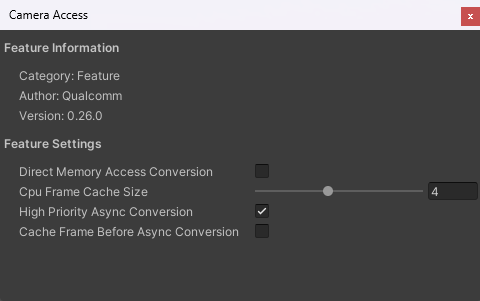

Feature Settings

Camera Frame Access has four feature settings:

- Direct Memory Access Conversion: Determines how the application accesses camera memory before CPU frame conversion. May improve performance on some devices while degrading it on others.

- CPU Frame Cache Size: Size of the XRCpuImage.ConvertAsync frame cache, defining how many asynchronous conversion requests can be queued. When the limit is reached, older requests will expire.

- High Priority Async Conversion: Used by the application to decide whether to treat the conversion thread as a high-priority thread.

- Cache Frame Before Async Conversion: Guarantees async conversions by caching the frames upon request without relying on the CPU Frame Cache, with a slight performance and memory penalty. Ignored if Direct Memory Access Conversion is enabled.
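The CPU Frame Cache Size setting above can be pictured as a fixed-capacity first-in, first-out queue. The sketch below is a hypothetical, framework-free model (BoundedRequestCache is an invented name, not part of Snapdragon Spaces or AR Foundation, and this is not how Spaces implements the cache); it only illustrates why queuing more requests than the cache holds causes the oldest pending request to expire.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of a conversion-request cache with a fixed capacity.
// This is NOT the Snapdragon Spaces implementation; it only illustrates
// how a full cache expires its oldest pending request.
public sealed class BoundedRequestCache<T>
{
    private readonly Queue<T> _pending = new Queue<T>();
    private readonly int _capacity;

    public BoundedRequestCache(int capacity)
    {
        if (capacity < 1)
        {
            throw new ArgumentOutOfRangeException(nameof(capacity), "Capacity must be at least 1.");
        }
        _capacity = capacity;
    }

    public int Count => _pending.Count;

    // Queues a request. If the cache is full, the oldest request is removed,
    // returned through 'expired', and the method returns true.
    public bool Enqueue(T request, out T expired)
    {
        bool didExpire = false;
        expired = default(T);
        if (_pending.Count == _capacity)
        {
            expired = _pending.Dequeue();
            didExpire = true;
        }
        _pending.Enqueue(request);
        return didExpire;
    }

    // Removes the oldest pending request, if any (e.g. when a worker picks it up).
    public bool TryDequeue(out T request)
    {
        if (_pending.Count == 0)
        {
            request = default(T);
            return false;
        }
        request = _pending.Dequeue();
        return true;
    }
}
```

With a capacity of 2, queuing a third request expires the first one, while the second and third remain pending.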

Retrieving CPU images

Each time a new camera frame is available to the subsystem, the AR Camera Manager fires a frameReceived event. Subscribing to this event lets other scripts retrieve the latest camera frame as early as possible and operate on it. Once a camera frame is available, the camera manager's TryAcquireLatestCpuImage function returns true and outputs an XRCpuImage object, which represents a single raw image from the selected device camera. The raw pixel data of this image can be extracted with XRCpuImage's Convert function, which writes the converted data into a destination buffer such as a NativeArray<byte>. Snapdragon Spaces also supports asynchronous conversion of frames through XRCpuImage.ConvertAsync, either by polling the returned AsyncConversion object and reading the data with AsyncConversion.GetData<byte>, or by providing a callback with a NativeArray<byte> argument that runs when conversion is completed.

An AR Camera Manager component is automatically added to the scene hierarchy when creating an XR Origin. Camera frames are requested from the OpenXR Runtime application while an AR Camera Manager component is enabled. Consider disabling this component if you do not require Camera Frame Access in your scene.

An AR Camera Background component is automatically added to the scene hierarchy when creating an XR Origin. This component must be disabled to avoid rendering issues mentioned in the known issues section.

XRCpuImage objects must be explicitly disposed of after conversion. To do this, use XRCpuImage's Dispose function. Failing to dispose of XRCpuImage objects may leak memory until the camera access subsystem is destroyed.

If allocating a NativeArray<byte> before conversion, this buffer must also be disposed of after copy or manipulation. To do this, use NativeArray<T>'s Dispose function. Failing to dispose of NativeArray<byte> will leak memory until the camera access subsystem is destroyed.

AsyncConversion objects must be explicitly disposed of after the data has been used. To do this, use AsyncConversion's Dispose function. Failing to dispose of AsyncConversion objects will leak memory until the camera access subsystem is destroyed.

When using XRCpuImage.ConvertAsync and providing a callback function, it is not necessary to dispose of the provided NativeArray<byte> data buffer. Conversion data will be disposed by the camera subsystem when the callback finishes.

For detailed information about how to use frameReceived, TryAcquireLatestCpuImage, XRCpuImage.Convert and XRCpuImage.ConvertAsync, please refer to the Unity documentation.

Camera Frame Access may need a few seconds to initialize, depending on the device. Do not try to access frames before the subsystem has initialized successfully. Using the frameReceived event from the AR Foundation API is highly recommended to avoid errors.

The sample code below requests a CPU image from the AR Camera Manager when the frameReceived event is fired. If successful, it converts the XRCpuImage's raw pixel data directly into the buffer returned by the target Texture2D's GetRawTextureData<byte> function, then uploads the texture with the Apply function. Finally, it assigns the texture to the target RawImage, making the new frame visible in the application's UI.

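```csharp
using System;
using Unity.Collections.LowLevel.Unsafe;
using UnityEngine;
using UnityEngine.UI;
using UnityEngine.XR.ARFoundation;
using UnityEngine.XR.ARSubsystems;

// Requires "Allow 'unsafe' Code" in Player Settings because of GetUnsafePtr.
public class CameraFrameTextureSample : MonoBehaviour
{
    public RawImage CameraRawImage;

    // Assign in the Inspector (for example, the ARCameraManager on the XR Origin's camera).
    [SerializeField]
    private ARCameraManager _cameraManager;

    private Texture2D _cameraTexture;

    private void OnEnable()
    {
        _cameraManager.frameReceived += OnFrameReceived;
    }

    private void OnDisable()
    {
        // Unsubscribe so the handler does not outlive this component.
        _cameraManager.frameReceived -= OnFrameReceived;
    }

    private void OnFrameReceived(ARCameraFrameEventArgs args)
    {
        // TryAcquireLatestCpuImage fills the out parameter; no XRCpuImage needs to be constructed first.
        if (!_cameraManager.TryAcquireLatestCpuImage(out XRCpuImage image))
        {
            return;
        }

        UpdateCameraTexture(image);
    }

    private unsafe void UpdateCameraTexture(XRCpuImage image)
    {
        var format = TextureFormat.RGBA32;

        // (Re)create the texture only when the frame size changes.
        if (_cameraTexture == null || _cameraTexture.width != image.width || _cameraTexture.height != image.height)
        {
            _cameraTexture = new Texture2D(image.width, image.height, format, false);
        }

        var conversionParams = new XRCpuImage.ConversionParams(image, format);
        var rawTextureData = _cameraTexture.GetRawTextureData<byte>();

        try
        {
            // Convert writes directly into the texture's CPU-side buffer.
            image.Convert(conversionParams, new IntPtr(rawTextureData.GetUnsafePtr()), rawTextureData.Length);
        }
        finally
        {
            // Always release the native image, even if conversion throws.
            image.Dispose();
        }

        // Upload the new pixel data to the GPU and show it in the UI.
        _cameraTexture.Apply();
        CameraRawImage.texture = _cameraTexture;
    }
}
```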

The following texture formats are supported by the AR Camera Manager:

- RGB24
- RGBA32
- BGRA32
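Each supported format stores a fixed number of bytes per pixel, which determines how large a destination buffer passed to XRCpuImage.Convert must be. The standalone sketch below assumes tightly packed rows with no padding; the CameraTextureFormat enum and CameraFormatInfo helper are hypothetical stand-ins that only mirror the Unity TextureFormat names. Inside Unity, XRCpuImage.GetConvertedDataSize can report the required size directly.

```csharp
using System;

// Hypothetical stand-in for the three UnityEngine.TextureFormat values
// supported by the AR Camera Manager.
public enum CameraTextureFormat { RGB24, RGBA32, BGRA32 }

public static class CameraFormatInfo
{
    // Bytes occupied by one pixel in each format.
    public static int BytesPerPixel(CameraTextureFormat format)
    {
        switch (format)
        {
            case CameraTextureFormat.RGB24:
                return 3;
            case CameraTextureFormat.RGBA32:
            case CameraTextureFormat.BGRA32:
                return 4;
            default:
                throw new ArgumentOutOfRangeException(nameof(format));
        }
    }

    // Order of the 8-bit channels in memory for a single pixel.
    public static string ChannelOrder(CameraTextureFormat format)
    {
        switch (format)
        {
            case CameraTextureFormat.RGB24: return "RGB";
            case CameraTextureFormat.RGBA32: return "RGBA";
            case CameraTextureFormat.BGRA32: return "BGRA";
            default: throw new ArgumentOutOfRangeException(nameof(format));
        }
    }

    // Destination buffer size in bytes for a tightly packed width x height image.
    public static int BufferLength(CameraTextureFormat format, int width, int height)
    {
        return checked(width * height * BytesPerPixel(format));
    }
}
```

For a 640x480 frame, RGB24 needs 921,600 bytes and RGBA32 or BGRA32 need 1,228,800 bytes.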

Retrieving Camera Frames as Texture2D

This feature is only available for compatible Snapdragon Spaces all-in-one devices.

The sample code below reads a Texture2D from the ARCameraFrameEventArgs when the frameReceived event is fired. If the textures list inside args is not empty, it contains RGB textures ready for use. These textures have been converted from YUV to RGB on the GPU using shaders, which is faster than CPU conversion and therefore improves performance.

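```csharp
using UnityEngine;
using UnityEngine.UI;
using UnityEngine.XR.ARFoundation;

public class CameraFrameGpuTextureSample : MonoBehaviour
{
    public RawImage CameraRawImage;

    // Assign in the Inspector.
    [SerializeField] private ARCameraManager _cameraManager;

    private void OnEnable() => _cameraManager.frameReceived += OnFrameReceived;

    private void OnDisable() => _cameraManager.frameReceived -= OnFrameReceived;

    private void OnFrameReceived(ARCameraFrameEventArgs args)
    {
        // args.textures holds the GPU-converted RGB textures for this frame, if any.
        CameraRawImage.texture = args.textures != null && args.textures.Count > 0 ? args.textures[0] : null;
    }
}
```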

Retrieving YUV plane data

Snapdragon Spaces currently supports the Y'UV420sp and YUY2 formats. Y'UV420sp consists of a full-resolution Y buffer followed by an interleaved U/V buffer subsampled by 2 in both directions, so each U/V pair covers a 2x2 block of pixels. YUY2 consists of a single buffer interleaving Y and U/V samples in the form of Y-U-Y-V 'macropixels', each representing 2 horizontal pixels with equal chrominance, with no vertical subsampling. For detailed information about the YUV color model, please refer to the YUV section of the YCbCr Wikipedia article.
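To make the color model concrete, the sketch below converts one Y'UV sample to 8-bit RGB. It assumes full-range BT.601 (JFIF) coefficients; this page does not state which matrix or value range the camera uses, so treat the constants as an assumption and verify colors on your device. YuvColor.ToRgb is a hypothetical helper name.

```csharp
using System;

public static class YuvColor
{
    // Converts one Y'UV sample to RGB using ASSUMED full-range BT.601 coefficients.
    // U and V are stored with a +128 offset, so they are re-centred on zero first.
    public static (byte r, byte g, byte b) ToRgb(byte y, byte u, byte v)
    {
        double d = u - 128.0;
        double e = v - 128.0;
        double r = y + 1.402 * e;
        double g = y - 0.344136 * d - 0.714136 * e;
        double b = y + 1.772 * d;
        return (Clamp(r), Clamp(g), Clamp(b));
    }

    // Rounds to the nearest integer and clamps into the 0-255 byte range.
    private static byte Clamp(double value)
    {
        double rounded = Math.Round(value, MidpointRounding.AwayFromZero);
        return (byte)Math.Max(0.0, Math.Min(255.0, rounded));
    }
}
```

Neutral chroma (U = V = 128) yields a gray whose RGB values all equal Y; a high V with low U pushes the result toward red.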

If RGB conversion is not needed, the raw YUV plane data can be retrieved with XRCpuImage's GetPlane function, which returns an XRCpuImage.Plane object whose data can be read directly. The two formats can be told apart with XRCpuImage.planeCount, which gives the number of planes representing the frame: two for Y'UV420sp and one for YUY2.

Y'UV420sp

- Y plane data has index 0 and can be accessed via GetPlane(0).
- UV plane data has index 1 and can be accessed via GetPlane(1).
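In this layout every pixel has its own Y sample, while each U/V pair is shared by a 2x2 block of pixels. The sketch below computes byte offsets into the two planes, assuming a Y plane with one byte per pixel and a U/V plane of tightly interleaved 2-byte pairs whose rows are rowStride bytes apart (as reported by XRCpuImage.Plane.rowStride). Whether U or V comes first in each pair is not stated on this page, so it is a parameter; Yuv420spLayout is a hypothetical helper.

```csharp
public static class Yuv420spLayout
{
    // Offset of the Y sample for pixel (x, y) in plane 0 (assumes 1 byte per Y sample).
    public static int YIndex(int x, int y, int yRowStride)
    {
        return y * yRowStride + x;
    }

    // Offsets of the U and V samples in plane 1 shared by the 2x2 block
    // containing pixel (x, y). Assumes 2-byte interleaved U/V pairs.
    public static (int u, int v) UvIndices(int x, int y, int uvRowStride, bool uFirst)
    {
        int pairStart = (y / 2) * uvRowStride + (x / 2) * 2;
        return uFirst ? (pairStart, pairStart + 1) : (pairStart + 1, pairStart);
    }
}
```

For a 4x4 frame with no row padding, pixel (3, 1) reads its luma at offset 7 of plane 0 and, with U first, its chroma at offsets 2 and 3 of plane 1.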

YUY2

- YUYV plane data has index 0 and can be accessed via GetPlane(0).
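In YUY2, each 4-byte Y0-U-Y1-V macropixel covers two horizontally adjacent pixels that share one U and one V sample. The sketch below reads the Y, U and V values for a pixel from a managed copy of the plane (for example, obtained with NativeArray<byte>.ToArray()), assuming the Y-U-Y-V byte order described above and rows that are rowStride bytes apart; Yuy2Reader is a hypothetical helper.

```csharp
using System;

public static class Yuy2Reader
{
    // Returns the (Y, U, V) samples for the pixel at (col, row) in a YUY2 buffer.
    public static (byte Y, byte U, byte V) Read(byte[] data, int rowStride, int col, int row)
    {
        if (data == null) throw new ArgumentNullException(nameof(data));

        // Each macropixel is 4 bytes (Y0 U Y1 V) and covers 2 pixels.
        int macro = row * rowStride + (col / 2) * 4;

        // Even pixels use the first Y byte, odd pixels the second; both share U and V.
        byte luma = data[macro + (col % 2 == 0 ? 0 : 2)];
        return (luma, data[macro + 1], data[macro + 3]);
    }
}
```

Pixels 0 and 1 of a row share the chroma stored at bytes 1 and 3 of that row, while pixel 2 starts the next macropixel at byte 4.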

In case multiple applications are accessing the same sensor simultaneously, they may see a YUY2 frame format where they normally would see a Y'UV420sp frame format. It is recommended to handle both cases in your application. Not doing so might cause issues when using video capture or streaming services.

For detailed information about XRCpuImage.GetPlane and XRCpuImage.Plane, please refer to the Unity documentation.

The sample code below requests a CPU image from the AR Camera Manager when the frameReceived event is fired. If successful, it retrieves the XRCpuImage's raw plane data to apply different image processing algorithms to it depending on the YUV format.

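```csharp
using UnityEngine;
using UnityEngine.XR.ARFoundation;
using UnityEngine.XR.ARSubsystems;

public class CameraPlaneSample : MonoBehaviour
{
    // Assign in the Inspector.
    [SerializeField] private ARCameraManager _cameraManager;

    private void OnEnable() => _cameraManager.frameReceived += OnFrameReceived;

    private void OnDisable() => _cameraManager.frameReceived -= OnFrameReceived;

    private void OnFrameReceived(ARCameraFrameEventArgs args)
    {
        if (!_cameraManager.TryAcquireLatestCpuImage(out XRCpuImage image))
        {
            return;
        }

        try
        {
            // The plane count identifies the YUV layout of this frame.
            switch (image.planeCount)
            {
                case 1:
                    ProcessYuy2Image(image);
                    break;
                case 2:
                    ProcessYuv420Image(image);
                    break;
            }
        }
        finally
        {
            // Plane data is only valid until the image is disposed.
            image.Dispose();
        }
    }

    private void ProcessYuv420Image(XRCpuImage image)
    {
        var yPlane = image.GetPlane(0);
        var uvPlane = image.GetPlane(1);

        for (int row = 0; row < image.height; row++)
        {
            for (int col = 0; col < image.width; col++)
            {
                // Luma sample for this pixel; rowStride may include row padding.
                byte luma = yPlane.data[row * yPlane.rowStride + col * yPlane.pixelStride];
                // Perform image processing here...
            }
        }
    }

    private void ProcessYuy2Image(XRCpuImage image)
    {
        var yuyvPlane = image.GetPlane(0);

        for (int row = 0; row < image.height; row++)
        {
            for (int col = 0; col < image.width; col++)
            {
                // Perform image processing here...
            }
        }
    }
}
```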

Retrieving Sensor Intrinsics

The camera manager's TryGetIntrinsics function outputs an XRCameraIntrinsics object which describes the physical characteristics of the selected sensor, and returns false if the intrinsics cannot be acquired. For detailed information about XRCameraIntrinsics, please refer to the Unity documentation.

The sample code below retrieves the intrinsics of the selected sensor and displays them in the application UI.

```csharp
using UnityEngine;
using UnityEngine.UI;
using UnityEngine.XR.ARFoundation;
using UnityEngine.XR.ARSubsystems;

public class CameraIntrinsicsSample : MonoBehaviour
{
    public Text[] ResolutionTexts;
    public Text[] FocalLengthTexts;
    public Text[] PrincipalPointTexts;

    // Assign in the Inspector.
    [SerializeField] private ARCameraManager _cameraManager;

    private XRCameraIntrinsics _intrinsics;

    private void UpdateCameraIntrinsics()
    {
        if (!_cameraManager.TryGetIntrinsics(out _intrinsics))
        {
            Debug.LogWarning("Failed to acquire camera intrinsics.");
            return;
        }

        // Resolution, focal length and principal point are all expressed in pixels.
        ResolutionTexts[0].text = _intrinsics.resolution.x.ToString();
        ResolutionTexts[1].text = _intrinsics.resolution.y.ToString();
        FocalLengthTexts[0].text = _intrinsics.focalLength.x.ToString("#0.00");
        FocalLengthTexts[1].text = _intrinsics.focalLength.y.ToString("#0.00");
        PrincipalPointTexts[0].text = _intrinsics.principalPoint.x.ToString("#0.00");
        PrincipalPointTexts[1].text = _intrinsics.principalPoint.y.ToString("#0.00");
    }
}
```
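Focal length and principal point are the parameters of a pinhole camera model, so together they can map a 3D point expressed in the camera's frame to a pixel, or give the camera's field of view. The sketch below is a generic pinhole model in plain C#, assuming pixel units and a camera frame whose Z axis points forward with X and Y aligned to the image's u and v axes; the sensor's actual axis conventions are an assumption to verify. PinholeCamera is a hypothetical type.

```csharp
using System;

public readonly struct PinholeCamera
{
    // Focal lengths and principal point, in pixels.
    public readonly double Fx, Fy, Cx, Cy;

    public PinholeCamera(double fx, double fy, double cx, double cy)
    {
        Fx = fx; Fy = fy; Cx = cx; Cy = cy;
    }

    // Projects a camera-space point onto the image; fails for points at or behind the camera.
    public bool TryProject(double x, double y, double z, out double u, out double v)
    {
        if (z <= 0.0)
        {
            u = v = double.NaN;
            return false;
        }
        u = Fx * x / z + Cx;
        v = Fy * y / z + Cy;
        return true;
    }

    // Horizontal field of view in degrees for an image 'width' pixels wide.
    public double HorizontalFovDegrees(int width)
    {
        return 2.0 * Math.Atan(width / (2.0 * Fx)) * 180.0 / Math.PI;
    }
}
```

With fx = fy = 500 and a principal point of (320, 240), the point (0.1, -0.2, 2.0) projects to pixel (345, 190).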

Retrieving Sensor Extrinsics

The AR Foundation API does not expose sensor extrinsics, so Snapdragon Spaces provides two alternative methods to retrieve them.

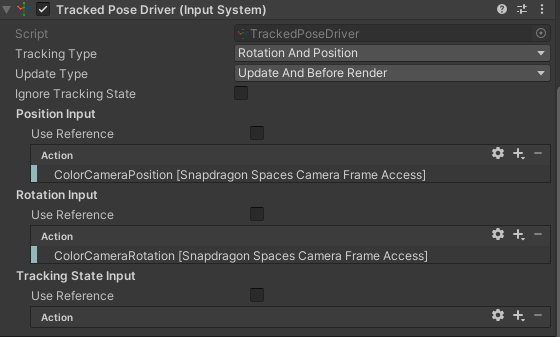

Method 1: Snapdragon Spaces Input Bindings

Snapdragon Spaces provides input actions for ColorCameraPosition and ColorCameraRotation. These input actions can be used with a Tracked Pose Driver (Input System) by binding them to its Position Input and Rotation Input. When this Tracked Pose Driver (Input System) is added to a GameObject, the pose of that GameObject matches the color camera extrinsics pose.

As the Tracked Pose Driver (Input System) component relies on the update timing of the underlying Input System, the transform of the associated GameObject might be outdated by one frame when requested.

If minimal latency is required or your system has low error tolerance, read the position and rotation directly with TrackedInputDriver.positionAction.ReadValue<Vector3>() and TrackedInputDriver.rotationAction.ReadValue<Quaternion>() respectively, where TrackedInputDriver refers to the Tracked Pose Driver (Input System) component.

The image above shows how to use these input bindings.

Method 2: Snapdragon Spaces Camera Pose Provider

Add the Spaces Camera Pose Provider component to the scene and use the GetPoseFromProvider function to retrieve the camera pose associated with the AR Camera Manager's latest frameReceived event. The provided pose is relative to the XR Origin's coordinate system.

The sample code below retrieves the extrinsics of the selected sensor and displays them in the application UI.

```csharp
using UnityEngine;
using UnityEngine.UI;
// Also add the using directives for the namespaces that define
// SpacesCameraPoseProvider and PoseDataFlags in your package versions.

public class CameraExtrinsicsSample : MonoBehaviour
{
    public SpacesCameraPoseProvider PoseProvider;
    public Text[] ExtrinsicPositionTexts;
    public Text[] ExtrinsicOrientationTexts;

    private Pose _extrinsics;

    private void UpdateCameraExtrinsics()
    {
        // GetPoseFromProvider reports which parts of the pose are valid; NoData means none.
        if (PoseProvider.GetPoseFromProvider(out _extrinsics) == PoseDataFlags.NoData)
        {
            Debug.LogWarning("Failed to acquire camera extrinsics.");
            return;
        }

        var position = _extrinsics.position;
        // Euler angles in degrees, used here for display only.
        var orientation = _extrinsics.rotation.eulerAngles;

        ExtrinsicPositionTexts[0].text = position.x.ToString();
        ExtrinsicPositionTexts[1].text = position.y.ToString();
        ExtrinsicPositionTexts[2].text = position.z.ToString();
        ExtrinsicOrientationTexts[0].text = orientation.x.ToString();
        ExtrinsicOrientationTexts[1].text = orientation.y.ToString();
        ExtrinsicOrientationTexts[2].text = orientation.z.ToString();
    }
}
```

Spaces Camera Pose Provider can also be used as a pose provider for a Tracked Pose Driver.
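Because the camera pose is relative to the XR Origin, it can carry a point measured in the camera's own frame into XR Origin space: rotate the point by the pose rotation, then add the pose position (in Unity terms, pose.rotation * point + pose.position). The sketch below shows the same arithmetic with System.Numerics so it runs outside Unity; CameraPoseMath is a hypothetical helper and the example pose values are made up.

```csharp
using System;
using System.Numerics;

public static class CameraPoseMath
{
    // Maps a point from camera space into XR Origin space using the camera pose:
    // rotate by the pose rotation first, then translate by the pose position.
    public static Vector3 CameraToOrigin(Vector3 pointInCamera, Vector3 position, Quaternion rotation)
    {
        return Vector3.Transform(pointInCamera, rotation) + position;
    }
}
```

For a camera at (1, 2, 3) rotated 90 degrees about the Y axis, a point one unit along the camera's Z axis lands at approximately (2, 2, 3) in XR Origin space.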

Tips to Increase Performance

Camera Access operations can be computationally expensive, whether due to a large image resolution or the nature of the algorithms used.

- When converting the image with XRCpuImage.Convert, provide XRCpuImage.ConversionParams with smaller outputDimensions.
- When processing the image with XRCpuImage.GetPlane, consider subsampling the data buffers with a common factor.
- Consider processing the image with XRCpuImage.ConvertAsync, which avoids blocking the main application thread during conversion.
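Subsampling by a common factor can be as simple as keeping every factor-th sample in each direction, which cuts the number of processed pixels by roughly the square of the factor. The sketch below does this for a single 8-bit plane held in a managed array, honoring the rowStride and pixelStride values reported by XRCpuImage.Plane; PlaneSubsampler is a hypothetical helper, and nearest-sample decimation without filtering is a deliberate simplification that can alias fine detail.

```csharp
using System;

public static class PlaneSubsampler
{
    // Keeps every 'factor'-th sample horizontally and vertically and returns a
    // tightly packed buffer of ceil(width / factor) x ceil(height / factor) bytes.
    public static byte[] Subsample(byte[] plane, int width, int height, int rowStride, int pixelStride, int factor)
    {
        if (plane == null) throw new ArgumentNullException(nameof(plane));
        if (factor < 1) throw new ArgumentOutOfRangeException(nameof(factor));

        int outWidth = (width + factor - 1) / factor;
        int outHeight = (height + factor - 1) / factor;
        var result = new byte[outWidth * outHeight];

        for (int row = 0; row < outHeight; row++)
        {
            // Byte offset of the source row; rowStride skips any row padding.
            int srcRow = row * factor * rowStride;
            for (int col = 0; col < outWidth; col++)
            {
                result[row * outWidth + col] = plane[srcRow + col * factor * pixelStride];
            }
        }
        return result;
    }
}
```

A factor of 1 simply strips row padding, which is also a convenient way to compact a strided plane before further processing.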

Snapdragon Spaces offers a Direct Memory Access Conversion setting, which changes the way XRCpuImage.Convert and XRCpuImage.ConvertAsync read and write data. By default, Snapdragon Spaces moves frame data using Marshal.Copy. Enabling this setting allows Spaces to use NativeArray<byte> direct representations of the source and target buffers instead. This setting can be found in Project Settings > XR Plug-in Management > OpenXR (> Android Tab) > Snapdragon Spaces > Camera Frame Access > Direct Memory Access Conversion. Enabling this setting may improve performance on certain devices, though it may negatively impact performance on other architectures. Below is a table indicating which devices the Direct Memory Access Conversion setting is recommended for:

| Device | Recommendation |
|---|---|
| Lenovo ThinkReality A3 | Improves performance, recommended |
| Lenovo ThinkReality VRX | Lowers performance, not recommended |

Retrieving Additional Sensor Data

The Spaces AR Camera Manager Config exposes the number of camera sensors in the device using the GetActiveCameraCount method. This information could be relevant for differentiating between dual devices and VR devices, and for appropriately handling the camera image.