Interaction Gestures

Main interaction gestures

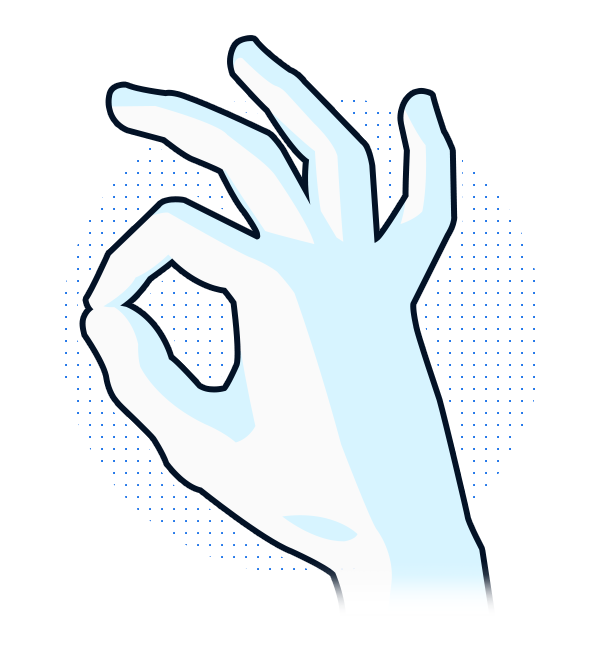

Pinch

The pinch gesture is performed by touching the tip of the thumb to the tip of the index finger while keeping the remaining fingers extended. The pinch is best recognized when the hand is viewed from the side.

The pinch is used for selection when the fingertips touch, or for manipulation when the gesture is held while the hand moves.

The pinch is an efficient, immersive gesture for validation because it provides auto-haptic feedback: the user physically feels the thumb and index finger touch.
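A pinch is often recognized by measuring the distance between the thumb tip and the index tip. The following is a minimal sketch, assuming the hand tracker exposes both fingertip positions in meters; the `PinchDetector` class and the `PINCH_ON`/`PINCH_OFF` thresholds are hypothetical values, not taken from any specific SDK. Two thresholds (hysteresis) keep the state from flickering when the fingers hover near the contact distance. A pinch start maps to selection and a held pinch to manipulation, as described above.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]

# Hypothetical thresholds in meters; tune them for the actual tracker.
PINCH_ON = 0.02   # fingertips closer than this: the pinch starts
PINCH_OFF = 0.04  # fingertips farther than this: the pinch ends


@dataclass
class PinchDetector:
    pinching: bool = False

    def update(self, thumb_tip: Vec3, index_tip: Vec3) -> str:
        """Return 'select' on pinch start, 'manipulate' while held,
        'release' on pinch end, or 'none' when no pinch is active."""
        distance = math.dist(thumb_tip, index_tip)
        if not self.pinching and distance < PINCH_ON:
            self.pinching = True
            return "select"
        if self.pinching and distance > PINCH_OFF:
            self.pinching = False
            return "release"
        # Between the two thresholds the previous state is kept (hysteresis).
        return "manipulate" if self.pinching else "none"
```

The gap between `PINCH_ON` and `PINCH_OFF` is a design choice: a single threshold would toggle selection on and off with tracking noise.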

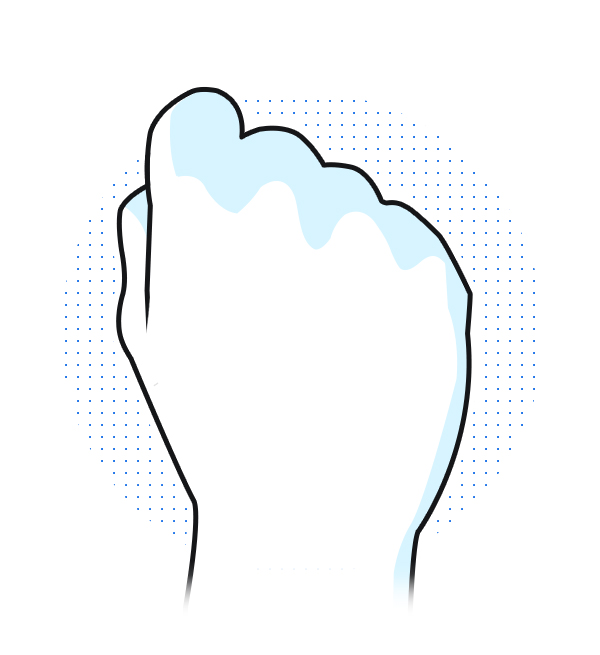

Grab

The grab gesture is performed by placing the hand in front of the camera and closing the fist.

This gesture is used to grab and manipulate large proximal objects, that is, objects within the user's reach.

Like the pinch, the grab gesture provides auto-haptic feedback.
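One simple way to recognize a closed fist is to check that every fingertip is curled in toward the palm. This is a sketch under stated assumptions: the tracker is assumed to provide a palm center and the index, middle, ring and little fingertip positions in meters, and `is_grab` and `CURLED_MAX_DIST` are hypothetical names with an illustrative threshold.

```python
import math

Vec3 = tuple[float, float, float]

# Hypothetical threshold in meters: a fingertip closer than this to the
# palm center counts as curled in.
CURLED_MAX_DIST = 0.05


def is_grab(palm_center: Vec3, fingertips: list[Vec3]) -> bool:
    """Closed fist: every tracked fingertip is curled in toward the palm.

    `fingertips` is assumed to hold the index, middle, ring and little
    fingertips; the thumb often rests outside the fist, so it is ignored.
    """
    if not fingertips:
        return False  # no tracking data: never report a grab
    return all(math.dist(palm_center, tip) < CURLED_MAX_DIST for tip in fingertips)
```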

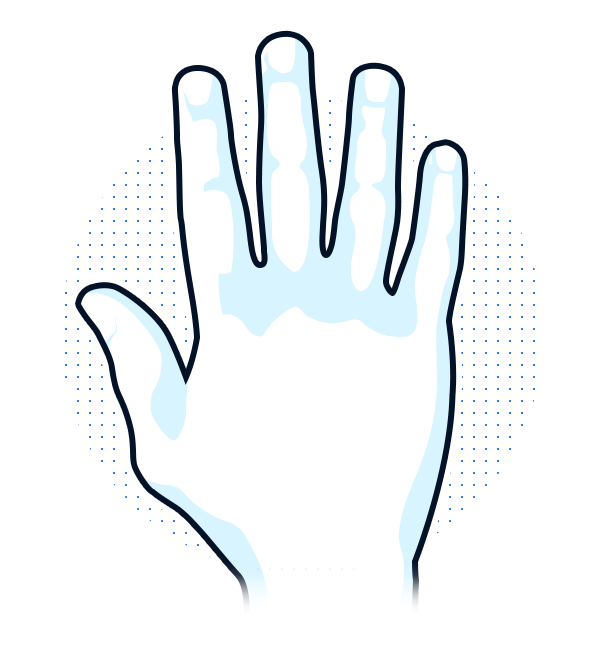

Open-Hand

The Open-Hand gesture is a neutral position. It is performed by extending the hand with the fingers open and the palm facing away from the camera.

Open-Hand is generally used to display and attach the ray cast in order to interact with distal elements (elements out of reach). It is also used as a release gesture.
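To find the distal element an open hand points at, a ray can be cast from the hand and tested against each interactive element. A minimal sketch, assuming the tracker supplies a ray origin (for example the palm center) and a pointing direction, and approximating each element by a bounding sphere; `Target` and `raycast` are hypothetical names, not an engine API. In a real project the engine's own physics raycast would normally replace this.

```python
import math
from dataclasses import dataclass
from typing import Optional

Vec3 = tuple[float, float, float]


@dataclass
class Target:
    name: str
    center: Vec3
    radius: float  # bounding sphere used as a cheap hit volume


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def raycast(origin: Vec3, direction: Vec3, targets: list[Target]) -> Optional[Target]:
    """Return the nearest target whose bounding sphere the ray hits, or None."""
    norm = math.sqrt(_dot(direction, direction))
    d = (direction[0] / norm, direction[1] / norm, direction[2] / norm)
    best, best_t = None, math.inf
    for target in targets:
        oc = _sub(target.center, origin)
        t_ca = _dot(oc, d)                  # center projected onto the ray
        dist2 = _dot(oc, oc) - t_ca * t_ca  # squared center-to-ray distance
        if dist2 > target.radius ** 2:
            continue                        # the ray misses this sphere
        t_hc = math.sqrt(target.radius ** 2 - dist2)
        # Use the entry point, or the exit point if the origin is inside.
        t = t_ca - t_hc if t_ca - t_hc >= 0 else t_ca + t_hc
        if 0 <= t < best_t:                 # ignore hits behind the hand
            best, best_t = target, t
    return best
```

The returned target is what the reticle would highlight in the hover state.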

Hand representation

Augmented Reality

In an AR context, displaying a virtual hand on top of the real one is not recommended. Instead, focus the feedback on the virtual elements rather than on a hand avatar.

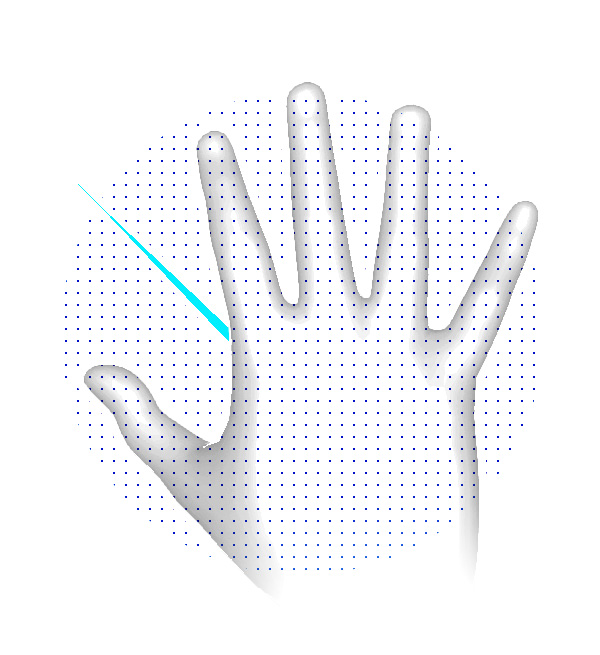

Virtual Reality

3D hand models driven by inverse kinematics are best suited to VR applications. A 3D representation of the hand is overlaid on the user's hand in digital space, creating a more immersive experience. The hand's representation should be adapted to the context of the demo.

In addition, the 3D hand must display visual effects that let the user know they are interacting.

Here are two examples of 3D hand avatars:

Alpha hand

Harlequin hand

Feedback, cues and affordances

Because hands don’t provide tactile feedback the way other input devices do, it’s essential to compensate with visual and audio feedback when users interact with 3D objects. Design distinct sounds and visual changes that confirm when a user interacts with a component.

To improve the user experience, consider including the equivalent of real-world feedback in the interaction design. This helps confirm, through a visual or audio cue, that an interaction with an object or a gesture is being, or has been, successfully executed.

Generally, the main states of a virtual element are the following:

| State | Visual Feedback | Audio Feedback |
|---|---|---|
| Idle | None | None |
| Hover | Yes | Yes |
| Selected | Yes | Yes |
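The three states in the table can be modeled as a small state machine that fires feedback only when the state changes, so a hover sound is not replayed every frame. A sketch under assumptions: `targeted` is assumed to come from collision (proximal) or the ray cast (distal), `gesture_active` from the pinch or grab detection, and `InteractiveElement` and the `on_feedback` hook are hypothetical names standing in for the application's rendering and audio code.

```python
from enum import Enum
from typing import Callable


class State(Enum):
    IDLE = "idle"
    HOVER = "hover"
    SELECTED = "selected"


# (visual, audio) feedback per state, mirroring the table: none when idle.
FEEDBACK = {
    State.IDLE: (False, False),
    State.HOVER: (True, True),
    State.SELECTED: (True, True),
}


class InteractiveElement:
    def __init__(self, on_feedback: Callable[[State, bool, bool], None]):
        self.state = State.IDLE
        self.on_feedback = on_feedback  # hypothetical hook into visuals/audio

    def update(self, targeted: bool, gesture_active: bool) -> State:
        if self.state == State.SELECTED and gesture_active:
            # Keep the selection while the gesture is held, so manipulation
            # continues even if the hand drifts off the element.
            new_state = State.SELECTED
        elif not targeted:
            new_state = State.IDLE
        elif gesture_active:
            new_state = State.SELECTED
        else:
            new_state = State.HOVER
        if new_state != self.state:
            self.state = new_state
            visual, audio = FEEDBACK[new_state]
            self.on_feedback(new_state, visual, audio)  # only on transitions
        return self.state
```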

Visual feedback

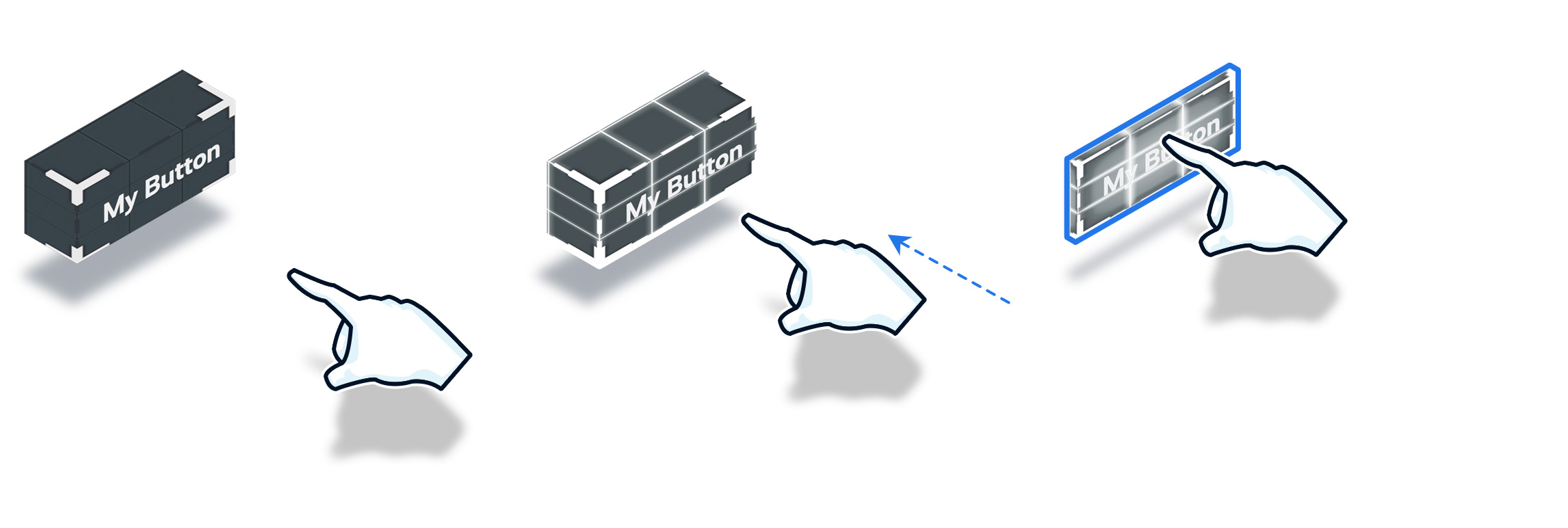

Object

When an object is successfully engaged, its behavior can change or it can be highlighted. The object can also change shape or size in reaction to an interaction or gesture.

Object states from left to right: idle, hover, selected.

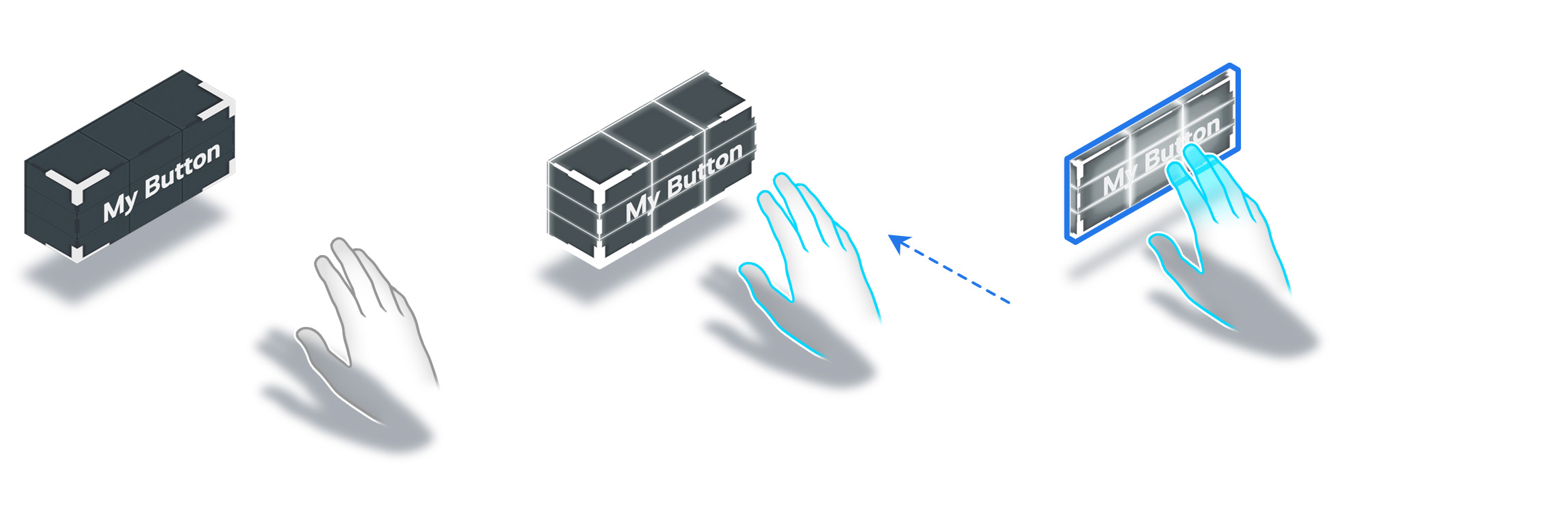

Hand

Depending on the context, it is recommended to give visual feedback on the 3D hand avatar in addition to the interactive objects.

Generally, the hand goes through three states: no interaction (idle), collision with an interactive element (hover), and interacting (selected). Here is a representation of the type of effect the 3D hand can have in each state:

Hand feedback: idle, hover, selected

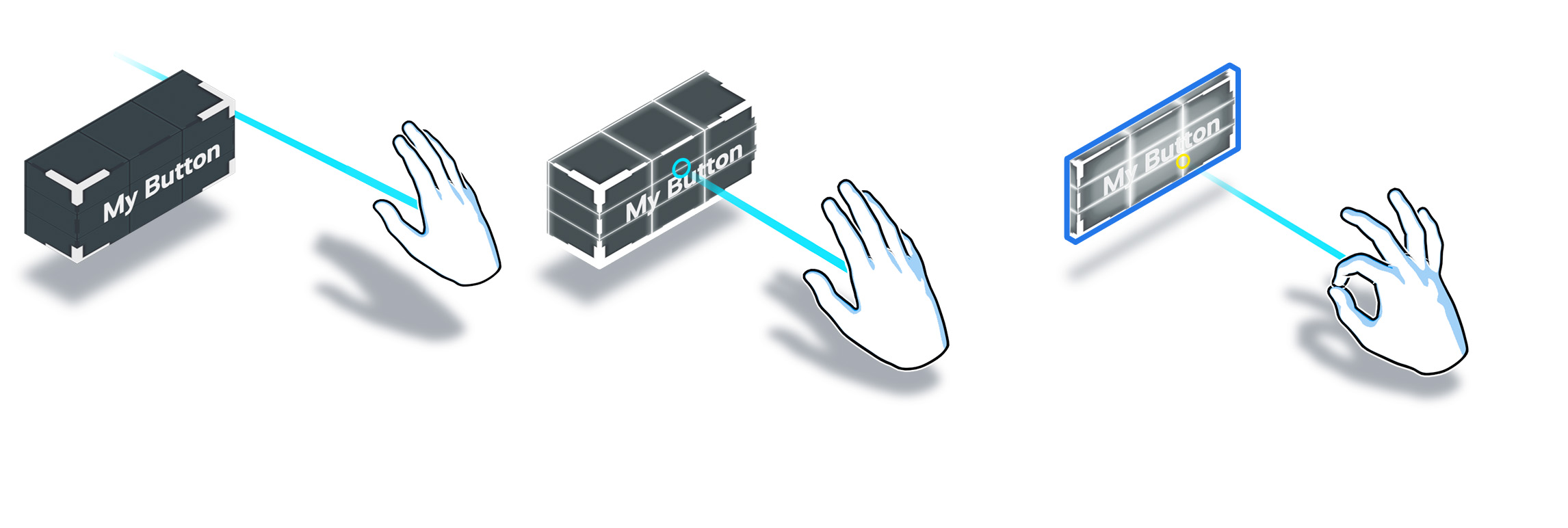

Reticle

To let users indicate the element they want to interact with, especially in a distal interaction context, the pointer must display different effects. In classic HMI (human-machine interface) design, a pointer's appearance varies with its interaction state, so these behaviors are already intuitive to users. It is therefore crucial to define, during the design phase, the different behaviors the ray cast and its reticle can have.

Reticle feedback: idle, hover, selected

Audio feedback

Audio feedback should preferably be implemented on the virtual objects only, because an interaction sound is context-dependent: the audio feedback for a 2D menu will differ from the audio feedback in a video game.

Audio can also be used for environmental sounds, which are likewise context-dependent.

Interaction cue

The interaction cue is a hand animation that is triggered when the user’s hand is not detected. It guides users who don't know how to interact with the virtual elements.

If the user has not interacted for x seconds (to be defined when designing the demo), the component is animated in a loop until the system detects an interaction.
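The cue's timing logic can be sketched as a small timer. This sketch reads the two sentences above together: the cue starts once the hand is not detected and no interaction has occurred for `timeout_s` seconds (the "x seconds" chosen per demo), and it keeps looping until an interaction is detected. `InteractionCue` is a hypothetical name, and the current time is passed in explicitly (for example from `time.monotonic()`) so the logic is easy to test.

```python
class InteractionCue:
    """Decides whether the looping hand animation should be shown."""

    def __init__(self, timeout_s: float, start_time: float = 0.0):
        self.timeout_s = timeout_s  # the "x seconds", defined per demo
        self.last_interaction = start_time
        self.visible = False

    def update(self, now: float, hand_detected: bool, interacted: bool) -> bool:
        if interacted:
            # Any detected interaction resets the timer and stops the loop.
            self.last_interaction = now
            self.visible = False
        elif not hand_detected and now - self.last_interaction >= self.timeout_s:
            self.visible = True  # start (or keep) looping the animation
        # Otherwise the cue keeps its current visibility.
        return self.visible
```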

Cheat Sheet

Gestures and feedback best adapted to interaction states:

| Interaction state | Proximal | Distal | Feedback |
|---|---|---|---|
| Target | Collision | Open-Hand (raycast) | Visual, Audio |
| Select | Pinch, Grab | Pinch | Visual, Audio |
| Manipulate | Pinch, Grab | Pinch | Visual, depending on context |
| UI Target | Collision | Open-Hand (raycast) | Visual, Audio |
| UI Select | Pinch, Point, Open-Hand | Pinch | Visual, Audio |
| UI Manipulate | Pinch, Point, Open-Hand | Pinch | Visual, Audio |
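In an application, the cheat sheet could be encoded as a lookup table so that only the recommended gestures are accepted for the current interaction state and distance. This is a sketch: `GESTURES` and `is_allowed` are hypothetical names, and the gesture names are copied from the cheat sheet, with "Open-Hand (raycast)" shortened to "Open-Hand".

```python
# (interaction state, distance) -> gestures recommended by the cheat sheet
GESTURES: dict[tuple[str, str], frozenset[str]] = {
    ("Target", "proximal"): frozenset({"Collision"}),
    ("Target", "distal"): frozenset({"Open-Hand"}),
    ("Select", "proximal"): frozenset({"Pinch", "Grab"}),
    ("Select", "distal"): frozenset({"Pinch"}),
    ("Manipulate", "proximal"): frozenset({"Pinch", "Grab"}),
    ("Manipulate", "distal"): frozenset({"Pinch"}),
    ("UI Target", "proximal"): frozenset({"Collision"}),
    ("UI Target", "distal"): frozenset({"Open-Hand"}),
    ("UI Select", "proximal"): frozenset({"Pinch", "Point", "Open-Hand"}),
    ("UI Select", "distal"): frozenset({"Pinch"}),
    ("UI Manipulate", "proximal"): frozenset({"Pinch", "Point", "Open-Hand"}),
    ("UI Manipulate", "distal"): frozenset({"Pinch"}),
}


def is_allowed(state: str, distal: bool, gesture: str) -> bool:
    """True if the cheat sheet recommends `gesture` for this state and distance."""
    key = (state, "distal" if distal else "proximal")
    return gesture in GESTURES.get(key, frozenset())  # unknown states allow nothing
```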