Extended Hand Tracking Sample

Core Assets

The Core Assets sample provides predefined assets that can be shared between projects.

These assets are packaged as a sample so that they can be imported into any project, used as a starting point, and customized.

The sample contains predefined XR Rigs and Interactors, plus a default input mapping to speed up the setup of a QCHTI project.

QCHT Samples

This sample scene demonstrates a range of interactions (proximal, distal, one-handed, two-handed) in a demo context, using the Hand Tracking feature. For more information about how Hand Tracking works, please refer to the Hand Tracking Integration section.

Build Samples Scene

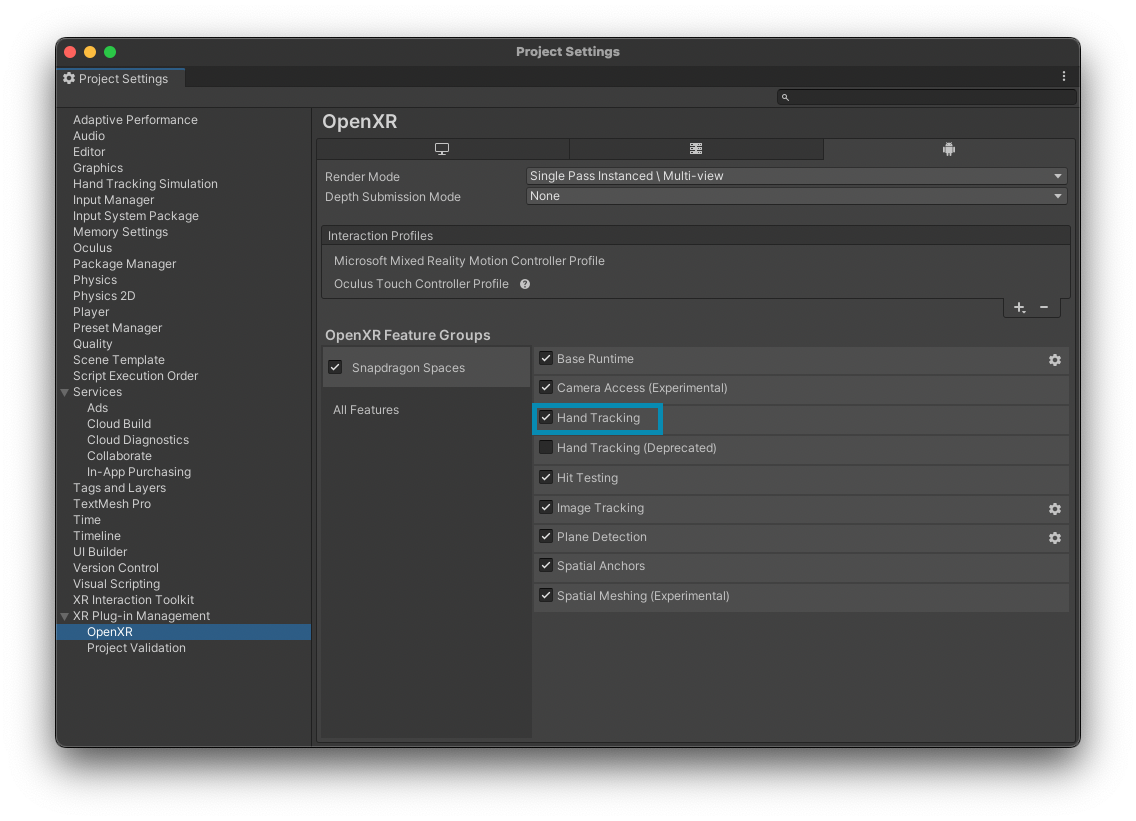

First, make sure that Hand Tracking is enabled in the OpenXR project settings.

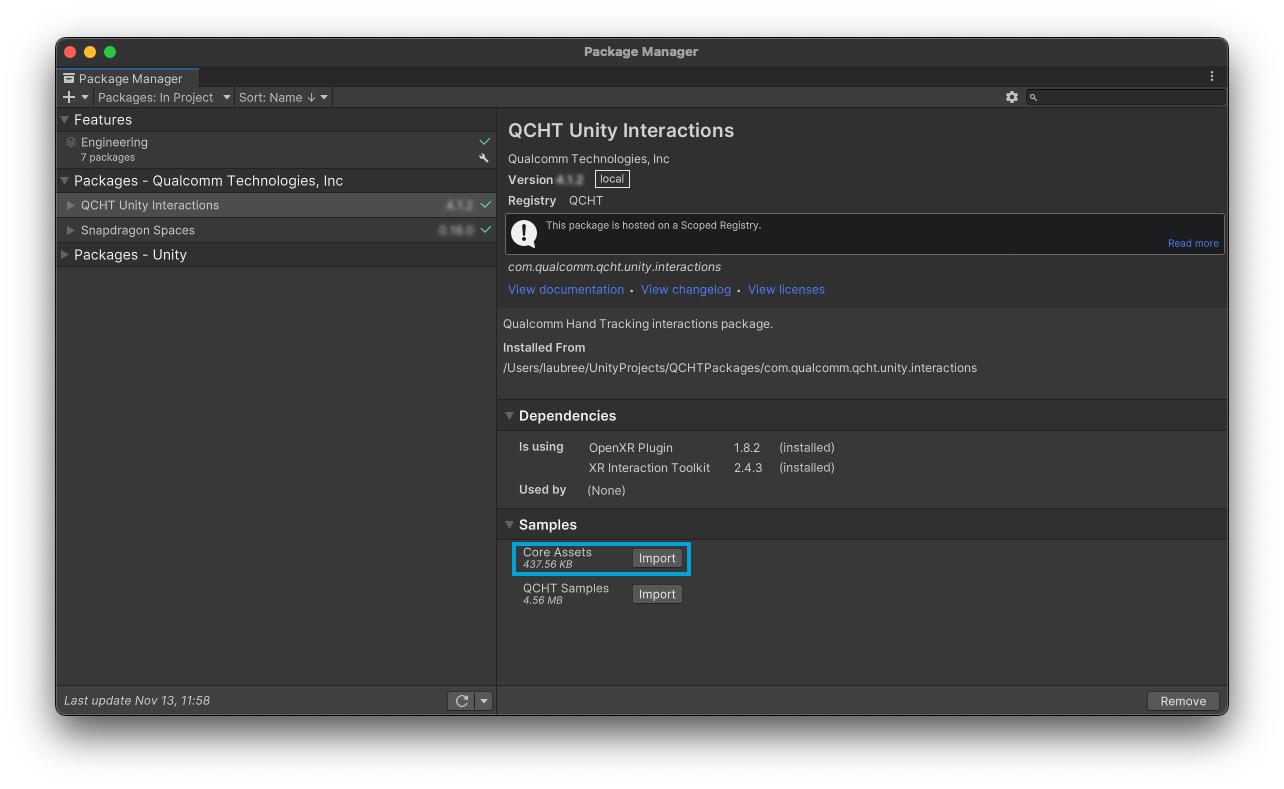

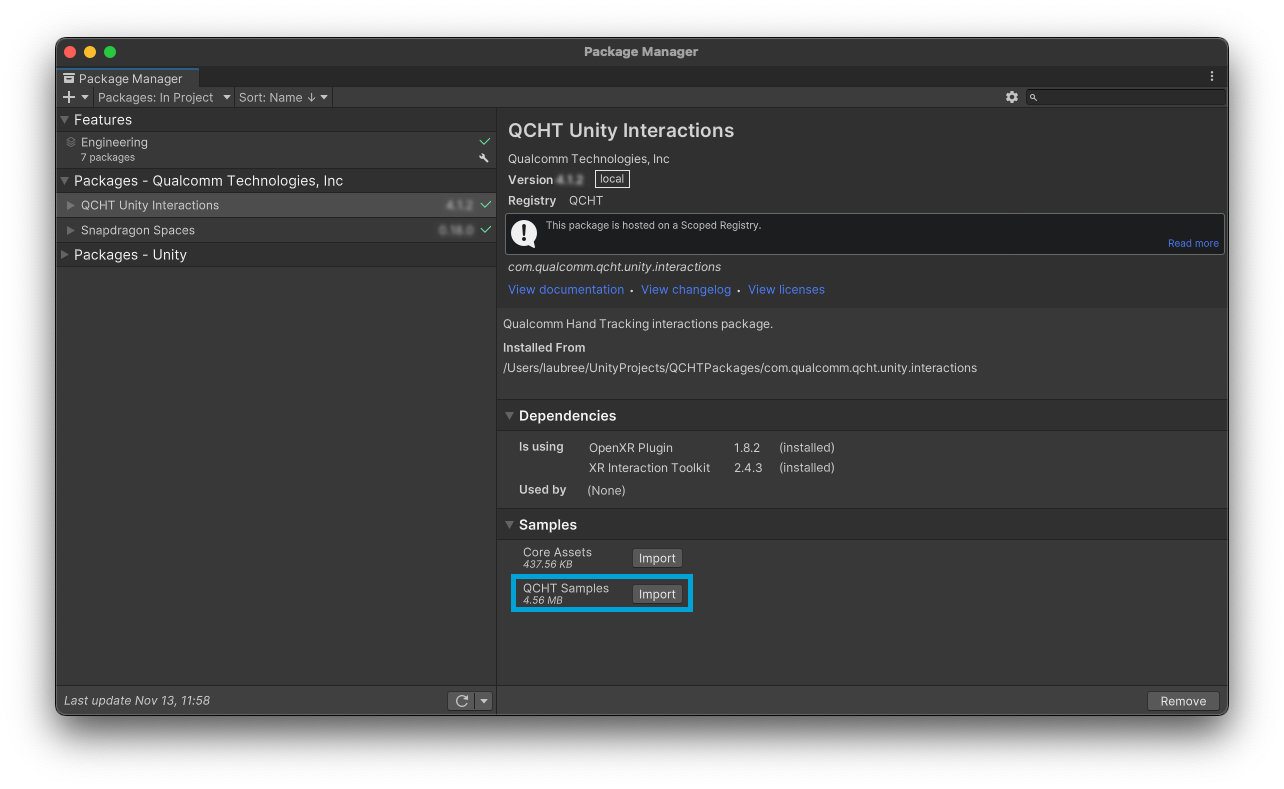

Then, import the samples from the QCHT Unity Interactions package.

Once the import is complete, all the scenes can be found in Assets > Samples > QCHT Unity Interactions > [package version number] > QCHT Interactions Samples.

To try the samples in editor mode, open the QCHT Sample - Menu scene, located in Assets > Samples > QCHT Unity Interactions > [package version number] > QCHT Interactions Samples > Menu > Scenes. To interact in editor simulation mode, please refer to the information in Scene Setup.

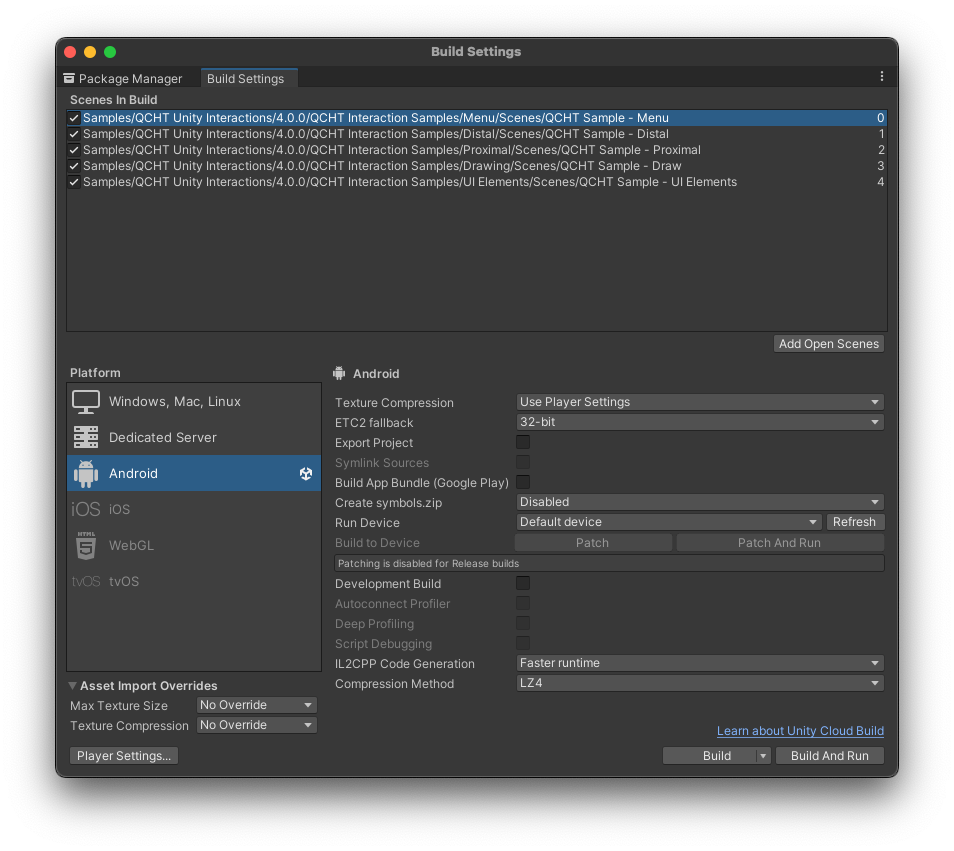

Before testing the Hand Tracking samples, add all of the sample scenes to the Build Settings.

Sample Description

This sample is split into 4 scenes. The main scene, QCHT Sample - Menu, combines all of them.

The main menu lets you switch between scenes, interactions, and hand avatars. Displaying a hand avatar in an AR context is generally not recommended.

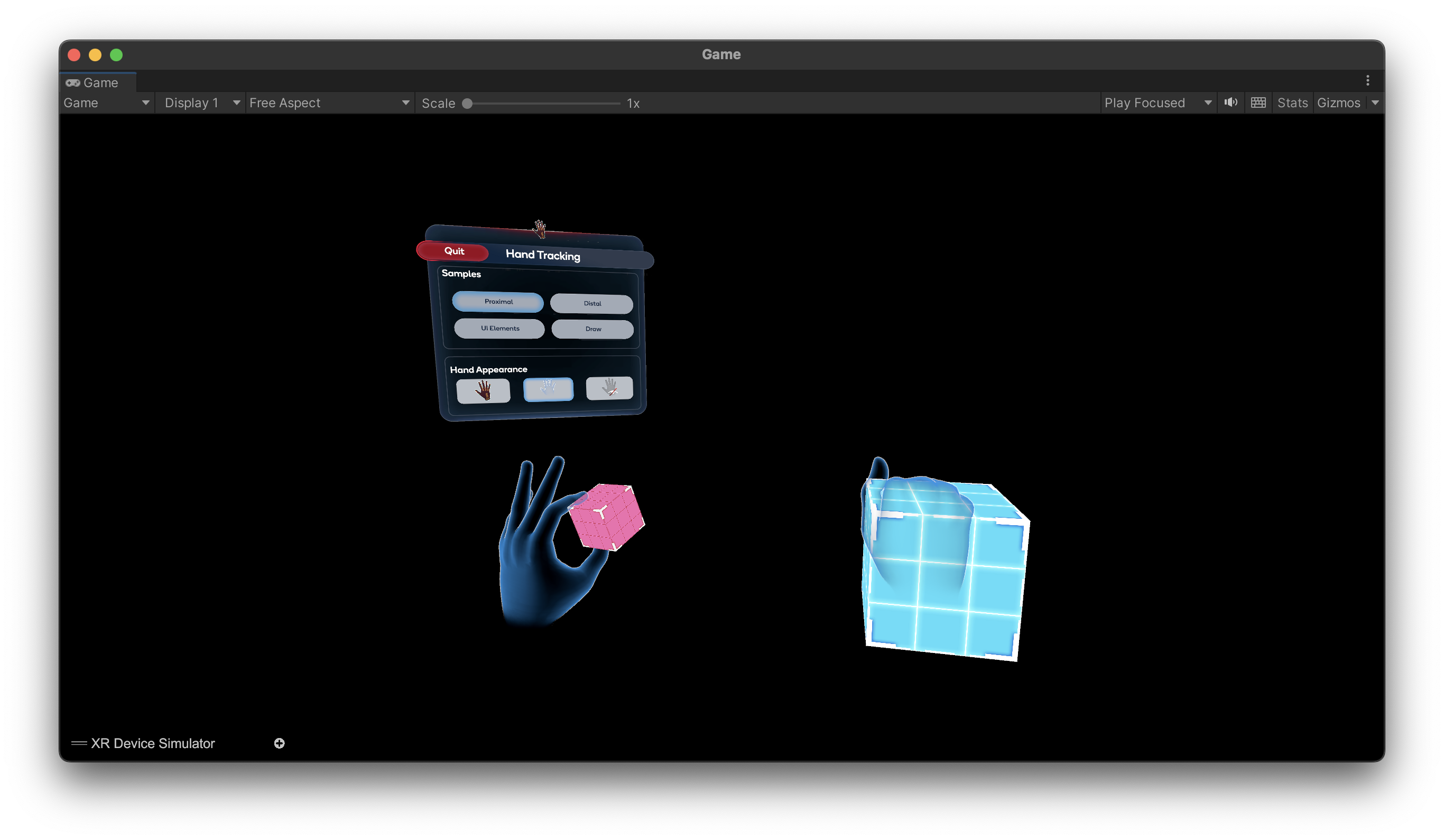

Proximal

Simple Interaction

Interact proximally with a 3D object with snapping (pink cube) or without snapping (blue cube).

Snapping

When the user pinches the pink cube, their hand avatar snaps onto it, as preset in the Proximal Snapping Editor.

The snappable object relies mainly on the Snap Pose Provider system and the Snap Pose generator.

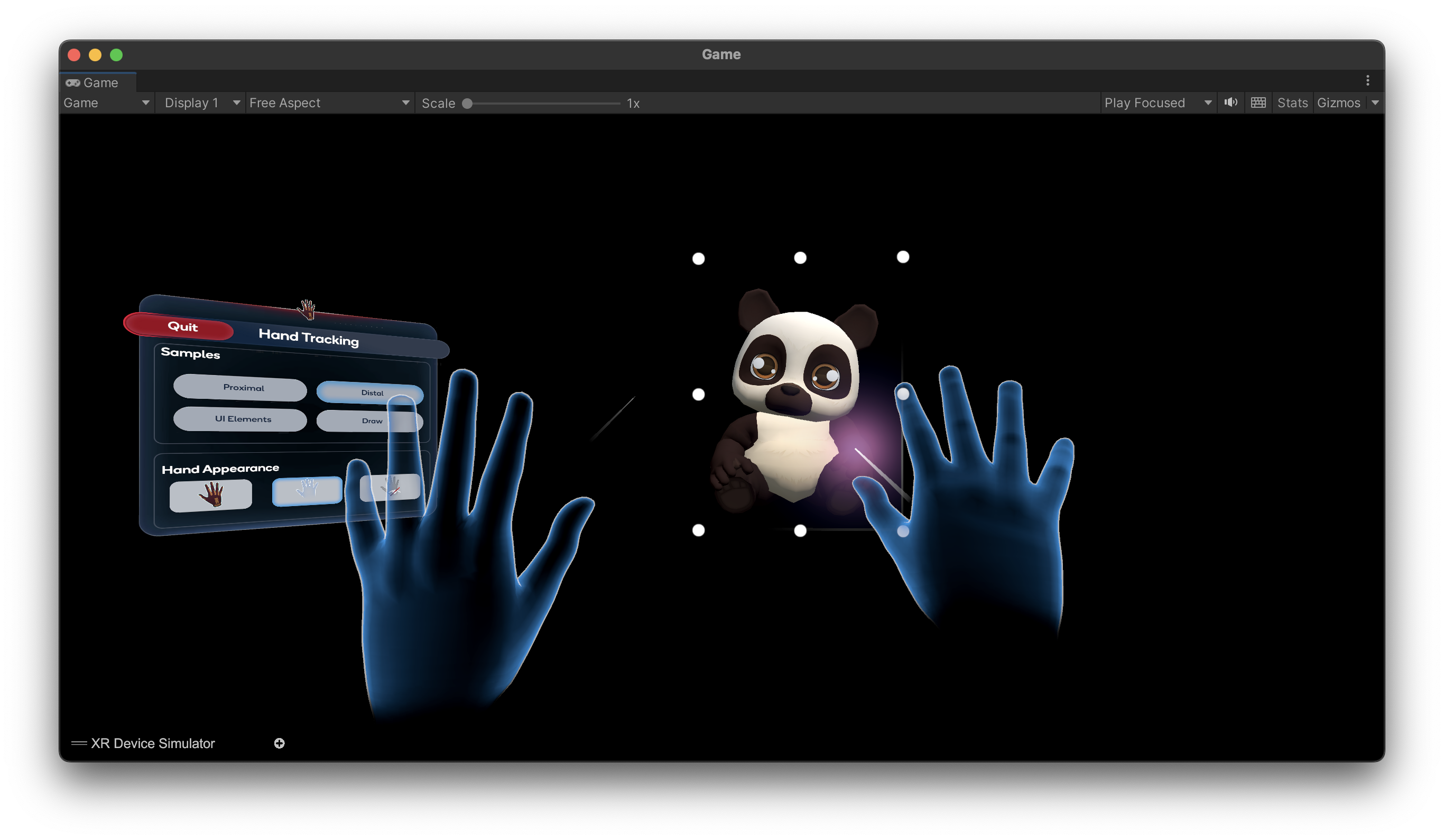

Distal

The panda becomes interactive when the user targets it with the raycast coming from their hand. By pinching, the user can move, rotate, and resize it thanks to the Control box component.

UI Elements

UI elements are manipulated with the raycast system. There are many elements to interact with, such as radio buttons, checkboxes, sliders, scroll views, and buttons. To manipulate an element, target it and pinch to select.

UI elements receive all the events and translate them into standard Unity events. They are the link between the user's action and the system's reaction. Raycast elements respond to all Unity callbacks. Please refer to the Distal Interaction section to learn more about the raycast system.

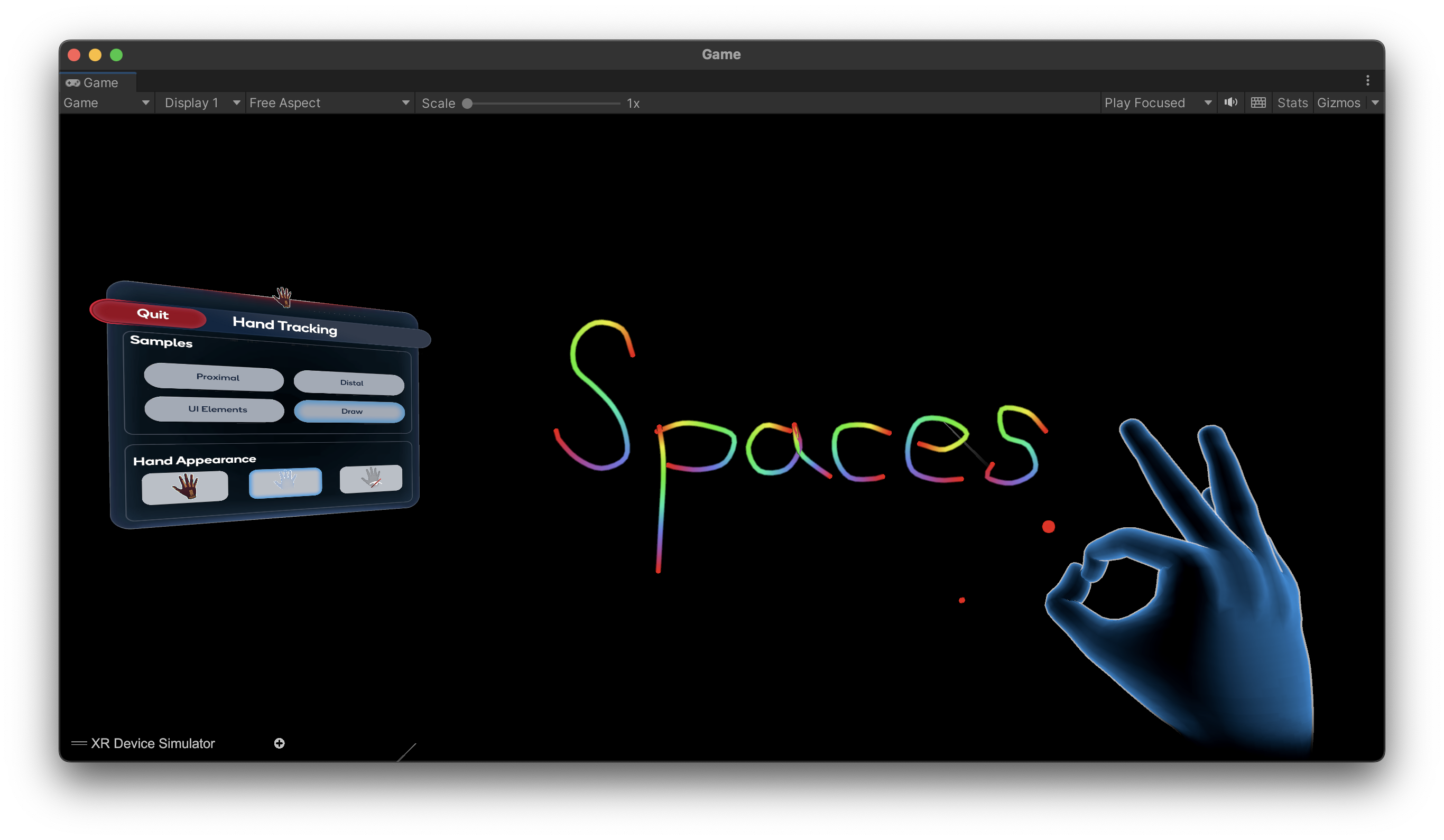

Draw

The Pinch gesture starts the drawing action and the Open-Hand gesture stops it.

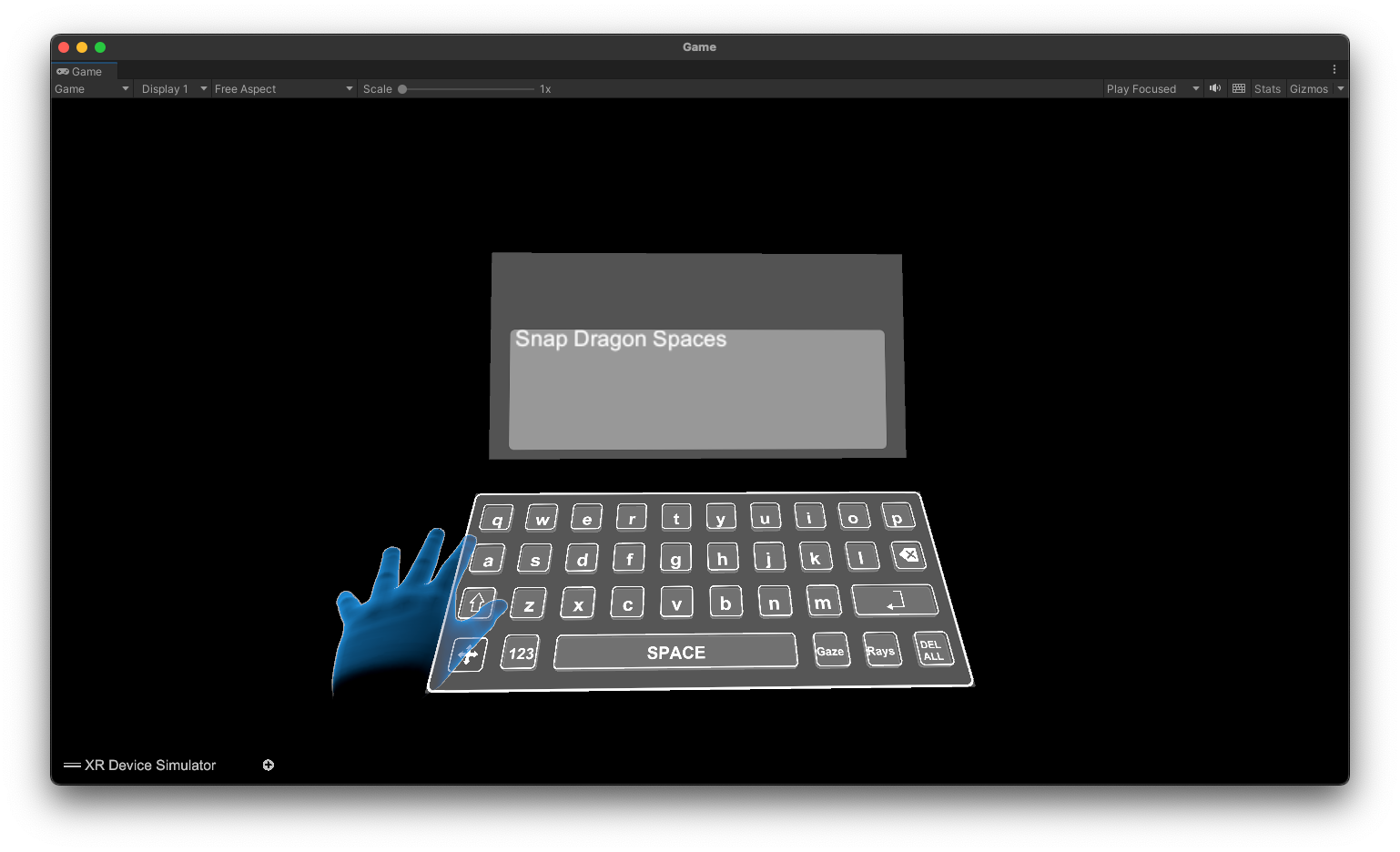
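The gesture rule above (pinch starts drawing, open hand stops it) can be read as a small two-state machine. The following is a minimal, engine-independent sketch in Python, not the package's actual implementation: the `Gesture` enum, the `DrawSession` class, and the idea of sampling a fingertip position every frame are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Gesture(Enum):
    """Hypothetical gesture labels; the real package exposes its own types."""
    PINCH = auto()
    OPEN_HAND = auto()
    OTHER = auto()


# A 3D position sampled from the hand (assumed to be the fingertip).
Point = tuple[float, float, float]


@dataclass
class DrawSession:
    """Collects strokes: a pinch opens a stroke, an open hand closes it."""
    strokes: list[list[Point]] = field(default_factory=list)
    drawing: bool = False

    def update(self, gesture: Gesture, fingertip: Point) -> None:
        if gesture is Gesture.PINCH and not self.drawing:
            # Pinch while idle: start a new stroke.
            self.drawing = True
            self.strokes.append([])
        elif gesture is Gesture.OPEN_HAND and self.drawing:
            # Open hand while drawing: end the stroke, record nothing.
            self.drawing = False
            return
        if self.drawing:
            # Every other frame while drawing extends the current stroke.
            self.strokes[-1].append(fingertip)


# Usage: feed one (gesture, position) pair per tracked frame.
session = DrawSession()
frames = [
    (Gesture.OTHER, (0.0, 0.0, 0.0)),      # ignored, not drawing yet
    (Gesture.PINCH, (0.1, 0.0, 0.0)),      # starts the first stroke
    (Gesture.OTHER, (0.2, 0.0, 0.0)),      # still drawing
    (Gesture.OPEN_HAND, (0.3, 0.0, 0.0)),  # stops drawing
    (Gesture.PINCH, (0.4, 0.0, 0.0)),      # starts a second stroke
]
for gesture, position in frames:
    session.update(gesture, position)
```

In this sketch only the open hand ends a stroke, so briefly losing the pinch does not break the line. Whether the sample scene behaves the same way in that case is not stated here.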

Poke (Experimental)

You can find a sample implementation of the poke in the QCHT Sample - Poke scene, located in Assets > Samples > QCHT Unity Interactions > [package version number] > QCHT Interactions Samples > Poke > QCHT Sample - Poke.

In this scene, the Poke Interactor is used in a virtual keyboard. The keyboard allows the user to type text by touching the keys with their index finger, thanks to the Poke Interactor.

Each key contains a Button and a Simple Interactable. A key also has an XRPokeFollowTransform component which gives it a visual effect to simulate a pressed action, and a KeyButton to set its behavior when pressed.

It is also possible to interact with the keys using Distal Interactions.
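The per-key behavior described above can be pictured as a key object that only knows what to do when it is pressed, regardless of whether the press comes from a poke or a distal selection. The following is a rough, engine-independent sketch in Python rather than the sample's KeyButton code: `TextField`, `Key`, `character_key`, and `backspace_key` are hypothetical names, and the backspace key is an assumed example of a non-character behavior.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class TextField:
    """Hypothetical text target that the keyboard writes into."""
    text: str = ""


@dataclass
class Key:
    """Hypothetical stand-in for a keyboard key and its press behavior."""
    label: str
    on_press: Callable[[TextField], None]

    def press(self, target: TextField) -> None:
        # The interactor type (poke or distal) is irrelevant here:
        # both would end up calling the same press behavior.
        self.on_press(target)


def character_key(char: str) -> Key:
    """Builds a key that appends its character to the target text."""
    def append(target: TextField) -> None:
        target.text += char
    return Key(char, append)


def backspace_key() -> Key:
    """Builds a key that removes the last character, if any."""
    def erase(target: TextField) -> None:
        target.text = target.text[:-1]
    return Key("Backspace", erase)


# Usage: press a few keys in sequence against one text field.
text_field = TextField()
for key in (character_key("h"), character_key("i"),
            character_key("!"), backspace_key()):
    key.press(text_field)
```

Keeping the behavior on the key, separate from the interactor that triggers it, is what allows one keyboard to serve both poke and distal input in this sketch.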